| CS631p Human Computer Interaction | Spring 2001 | Lecture 3 |

Using Video to Prototype User Interfaces

Laurie Vertelney

How to decide what interfaces to build:

- Specify what tasks the interface will enable. Begin with:
  - User Interface Engineering Requirements Specification
  - Lists of Functions and Commands
  - Scenarios or Stories
- Design how users will interact with technology to accomplish task.

How do you prototype user interface ideas?

Build First?

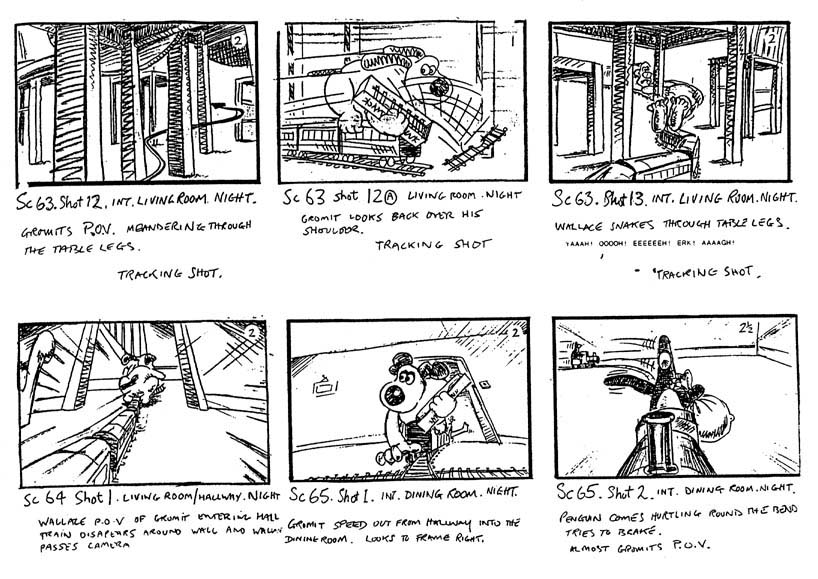

Develop Storyboards and Flipbooks:

Used by designers to:

- work out timing and sequence problems
- record design solutions

Flipbooks

- Used by animators
- One picture per page
- Pages fanned

Storyboards

- 8 or 12 drawings per page, with supporting text in a window below each drawing
- Describe, for animations and films:
  - camera shots
  - actions
  - timing events

Apply to Interface Design via Prototypes

- Animated Prototypes
  - Animated Drawing
    - Make sketch of interface
    - Photocopy sketch to make multiple copies
    - Draw atop copies to show interaction events as in-betweens
    - Place drawings in sequential order under video camera (shoot 1 drawing for 10 to 20 sec.)
    - Edit video
  - Cutout Animation
    - Objects cut from paper or cardboard and placed atop background
    - Film as they move under video camera
    - Edit video
  - Animated Objects
    - Three-dimensional objects
    - Move under camera with magnets or fishing line
  - Computer Animation
    - Cel Animation
- Computer Scripting Interactive Prototypes
  - HyperCard
  - Shockwave
- Mixed Media
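A rough planning aid for the Animated Drawing steps above: if each drawing is held under the camera for 10 to 20 seconds, the raw footage length follows directly from the number of drawings. The function names and the 12-drawing sequence are made up for illustration; this is a sketch, not part of Vertelney's method.

```python
def running_time_seconds(num_drawings, seconds_per_drawing):
    """Total footage length when every drawing is held for the same time."""
    if num_drawings < 0 or seconds_per_drawing < 0:
        raise ValueError("counts and durations must be non-negative")
    return num_drawings * seconds_per_drawing


def footage_range(num_drawings, min_hold=10, max_hold=20):
    """Shortest and longest raw footage for a 10 to 20 second hold per drawing."""
    return (
        running_time_seconds(num_drawings, min_hold),
        running_time_seconds(num_drawings, max_hold),
    )


# Hypothetical example: a sequence of 12 drawings.
low, high = footage_range(12)
print(f"Raw footage before editing: {low} to {high} seconds")
```

The editing step then cuts this raw footage down to the pace at which the interaction should appear to happen.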

Advantages:

- Provides entire range of video expression
- Good communication tool
- No programming

Disadvantages:

- Expensive

- Difficult to simulate

- Not Interactive

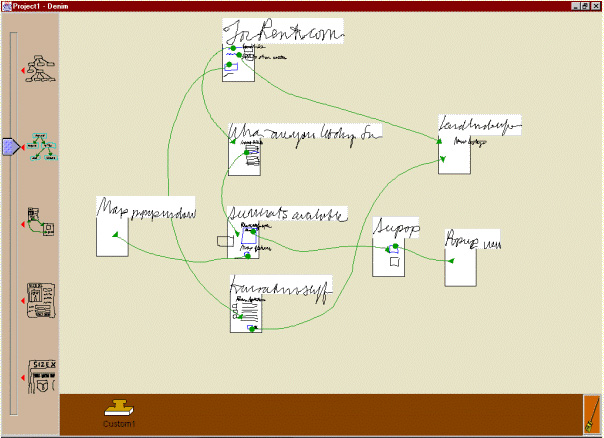

Sample Storyboard Software:

Methodology Matters: Doing Research in the Behavioral and Social Sciences

Joseph McGrath

Basic Features of Research Process

- Some context that is of interest
- Some ideas that give meaning to that context
- Some techniques or procedures by means of which those ideas and contexts can be studied

Research deals with several levels of phenomena: relations between elements within a context

Three domains:

- Substantive domain - elements of phenomena and relations among them. Involves:
  - States and actions of human systems
  - Conditions and processes that follow from those states and actions
- Conceptual domain - properties of states and actions, with connections among them:
  - Causal connections
  - Logical relations
  - Chronological relations

- Methodological domain
  - Modes of treatment
    - Techniques for measuring
      - Questionnaire
      - Rating scale
      - Personality test
      - Instruments for observing and recording communications
      - Techniques for assessing quality of "products"
    - Techniques for manipulating
      - Giving instruction
      - Imposing constraints
      - Selecting materials
      - Giving feedback
      - Using experimental confederates
    - Techniques for controlling
      - Experimental control
      - Statistical control
      - Distributing the impact (Randomization)
  - Relations - applications of various comparison techniques
    - Used to assess relations among two or more features of human system
    - Comparisons involve three sets of features of the systems under study:
      - Features that have been measured (dependent variables)
      - Features that have been measured or manipulated (independent variables)
      - All other features of system
    - Comparisons assess covariation or association between independent and dependent variables

Research methods as opportunities and limitations

Dual nature of methods:

- opportunities for gaining knowledge
- limitations to that knowledge

e.g. questionnaire - flawed

e.g. direct observation - impossible?

All methods flawed - must minimize flaws

Summary:

- Methods enable but limit evidence
- All methods are valuable, but all have weaknesses or limitations
- Offset weakness of one method by using multiple methods
- Select methods whose strengths offset weaknesses of others

Fundamental principle in behavioral or social sciences:

Credible empirical knowledge requires consistency or convergence of evidence across studies based on different methods

Research strategies: Choosing a setting for a study

Research evidence - somebody doing something in some setting

What we can ask:

- Who [which actors] - human systems
- What [which behaviors] - states and actions
- When and where [which contexts] - all features of surroundings

Maximize three criteria:

- Generalizability of evidence over actors
- Precision of measurement of actions
- Realism of context

Cannot maximize all three simultaneously - kind of like the uncertainty principle

Increasing one reduces the others:

- e.g. increasing precision - extending control of variables reduces realism
- e.g. field study - increases naturalness but reduces precision

Study Design, Comparison Techniques, and Validity

- Data collection
- Aggregation and partition
- Comparisons made

Comparison techniques: Assessing associations and differences

- Base rates: How often does Y occur, given X?
- Correlation: Are X and Y related? Is there systematic covariation in the values of two or more properties of the system? That is, do values of X covary with values of Y?
- Difference: Is Y present under conditions when X is present?
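The three comparison questions above can be sketched in a few lines of Python. This is a minimal illustration on made-up data, not McGrath's procedure: the function names, the participant data, and the choice of Pearson's r and a difference of means are all assumptions made for the example.

```python
from statistics import mean


def base_rate(x_present, y_present):
    """Base rate: how often Y occurs among the cases where X is present."""
    y_when_x = [y for x, y in zip(x_present, y_present) if x]
    if not y_when_x:
        raise ValueError("X is never present, so the rate is undefined")
    return sum(1 for y in y_when_x if y) / len(y_when_x)


def pearson_r(xs, ys):
    """Correlation: do values of X covary with values of Y?

    Raises ZeroDivisionError if either variable is constant.
    """
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    spread_x = sum((x - mx) ** 2 for x in xs) ** 0.5
    spread_y = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (spread_x * spread_y)


def mean_difference(x_present, ys):
    """Difference: mean of Y when X is present minus mean of Y when X is absent."""
    with_x = [y for x, y in zip(x_present, ys) if x]
    without_x = [y for x, y in zip(x_present, ys) if not x]
    return mean(with_x) - mean(without_x)


# Hypothetical data: eight participants, half of whom used online help (X).
used_help = [True, True, True, True, False, False, False, False]
completed = [True, True, True, False, True, False, False, False]
task_seconds = [30, 35, 40, 35, 50, 55, 45, 50]

# Hypothetical data: hours of practice versus errors made.
practice_hours = [1, 2, 3, 4, 5]
errors = [10, 8, 6, 4, 2]

print("Completion rate given help:", base_rate(used_help, completed))
print("Practice/error correlation:", pearson_r(practice_hours, errors))
print("Task time difference (s):", mean_difference(used_help, task_seconds))
```

None of these numbers says anything about cause on its own; that depends on how cases came to be in each condition, which is where randomization comes in.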

Randomization and "True Experiments"

Randomly assign cases to conditions
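A minimal sketch of random assignment, assuming a simple shuffle-and-deal scheme that keeps group sizes balanced. The function name, participant labels, and condition names are hypothetical.

```python
import random


def randomly_assign(cases, conditions, seed=None):
    """Shuffle the cases, then deal them round-robin into the conditions."""
    rng = random.Random(seed)  # a seed makes the allocation reproducible
    shuffled = list(cases)
    rng.shuffle(shuffled)
    groups = {condition: [] for condition in conditions}
    for i, case in enumerate(shuffled):
        groups[conditions[i % len(conditions)]].append(case)
    return groups


# Hypothetical study: ten participants, two interface conditions.
participants = [f"P{n}" for n in range(1, 11)]
groups = randomly_assign(participants, ["old_interface", "new_interface"], seed=42)
for condition, members in groups.items():
    print(condition, members)
```

Because chance alone decides who lands in which condition, every feature of the participants that was not measured or manipulated is, on average, spread evenly across conditions.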

Sampling, Allocation, and Statistical Inference

- Statistical analysis (e.g. coin toss)
- Probability
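The coin-toss example can be made concrete. The sketch below asks how likely a result at least this extreme would be if only chance were operating, using the binomial distribution; the function name and the 8-of-10 figure are assumptions for illustration. It needs Python 3.8 or later for `math.comb`.

```python
from math import comb


def prob_at_least(k, n, p=0.5):
    """Probability of k or more successes in n independent trials."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Hypothetical question: how surprising are 8 or more heads in 10 fair tosses?
chance = prob_at_least(8, 10)
print(f"P(at least 8 heads in 10 tosses) = {chance:.4f}")
```

A small probability suggests that chance alone is an unlikely explanation for the result, which is the basic logic of statistical inference.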

Validity of findings

- Internal validity - How confidently you can say that variation in X caused the variation in Y; must be able to rule out other plausible hypotheses
- External validity - How well your findings will hold up upon replication
- Threats to validity - plausible rival hypotheses
- Construct validity - How good is the theory

Classes of Measures and Manipulation Techniques

- Kinds of data collection methods (all have strengths and weaknesses):
  - Self reports or questionnaires
  - Observations (visible vs. hidden observer)
  - Archival records - material already exists
  - Trace measures - behavior itself leaves trace
- Techniques for manipulating variables
  - Selecting cases with desired values and allocating them to appropriate conditions of study
  - Direct intervention in systems under study
  - Indirect inclusion of variables

Three approaches to manipulation:

- Selection - directly select a choice
- Direct Intervention - set up test cases
- Inductions
  - Misleading instruction
  - False feedback
  - Experimental confederates

Usability Inspection Methods

Mack & Nielsen

Usability Inspection - evaluators examine usability-related aspects of a UI

Characteristic - reliance on judgment as the source of the evaluator's feedback

Four ways of evaluating UI:

- Automatically (run through software)
- Empirically (testing interface on real people)
- Formally (using exact models or formulas)
- Informally (based on rules-of-thumb) <- Usability Inspection

Best testing: empirical and informal

Inspection Objectives

Inspection occurs when design has been created and utility toward users needs to be evaluated

Concerned with classifying and counting number of usability problems.
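Classifying and counting can be sketched as a small tally. The evaluator names, problem ids, and category labels below are invented for illustration; the notes do not prescribe a classification scheme.

```python
from collections import Counter

# Hypothetical inspection findings: (evaluator, problem id, category).
findings = [
    ("evaluator_1", "P1", "major"),
    ("evaluator_1", "P2", "minor"),
    ("evaluator_2", "P1", "major"),
    ("evaluator_2", "P3", "cosmetic"),
    ("evaluator_3", "P2", "minor"),
    ("evaluator_3", "P4", "major"),
]


def unique_problems(findings):
    """Map each distinct problem id to its category, merging duplicate reports."""
    return {problem_id: category for _, problem_id, category in findings}


def count_by_category(findings):
    """Count distinct problems per category, ignoring who reported them."""
    return Counter(unique_problems(findings).values())


problems = unique_problems(findings)
counts = count_by_category(findings)
print("Distinct problems:", len(problems))
print("By category:", dict(counts))
```

Merging duplicate reports first matters because several evaluators often find the same problem; counting raw reports would overstate how many problems the design has.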

What is a problem? - any aspect of the design where a change would lead to improved system measures

Usability engineering lifecycle - when problems are found, the design team must redesign the UI, which means further analysis, design, and costing

Inspection Methods

- Heuristic evaluation - most informal - specialists judge whether each dialogue conforms to principles
- Guidelines reviews - cross between heuristic evaluation and standards inspection
- Pluralistic walkthroughs - meetings where users, developers, and human factors people step through a scenario
- Consistency inspections - designers representing multiple projects inspect an interface
- Standards inspections
- Cognitive walkthroughs - detailed procedure to simulate a user's problem solving process
- Formal usability inspection - like formal code inspection (moderator, scribe, inspectors, etc.)
- Feature inspection - looking for functions