Chapter 11. Distributed File

Systems

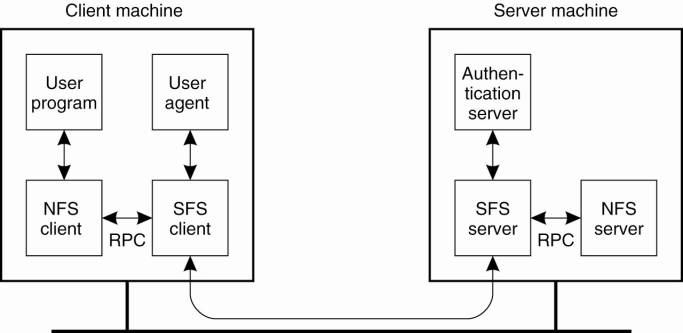

Considering that sharing

data is fundamental to distributed systems, it is not surprising that

distributed file systems form the basis for many distributed applications.

Distributed file systems allow multiple processes to share data over long

periods of time in a secure and reliable way. As such, they have been used as

the basic layer for distributed systems and applications. In this chapter, we

consider distributed file systems as a paradigm for general-purpose distributed

systems.

11.1. Architecture

We start our discussion on

distributed file systems by looking at how they are generally organized. Most

systems are built following a traditional client-server architecture, but fully

decentralized solutions exist as well. In the following, we will take a look at

both kinds of organizations.

11.1.1. Client-Server

Architectures

Many distributed files

systems are organized along the lines of client-server architectures, with Sun

Microsystem's Network File System (NFS) being one of the most widely-deployed

ones for UNIX-based systems. We will take NFS as a canonical example for

server-based distributed file systems throughout this chapter. In particular,

we concentrate on NFSv3, the widely-used third version of NFS (Callaghan, 2000)

and NFSv4, the most recent, fourth version (Shepler et al., 2003). We will

discuss the differences between them as well.

[Page 492]

The basic idea behind NFS is

that each file server provides a standardized view of its local file system. In

other words, it should not matter how that local file system is implemented;

each NFS server supports the same model. This approach has been adopted for

other distributed files systems as well. NFS comes with a communication

protocol that allows clients to access the files stored on a server, thus allowing

a heterogeneous collection of processes, possibly running on different

operating systems and machines, to share a common file system.

The model underlying NFS and

similar systems is that of a remote file service. In this model, clients are

offered transparent access to a file system that is managed by a remote server.

However, clients are normally unaware of the actual location of files. Instead,

they are offered an interface to a file system that is similar to the interface

offered by a conventional local file system. In particular, the client is

offered only an interface containing various file operations, but the server is

responsible for implementing those operations. This model is therefore also

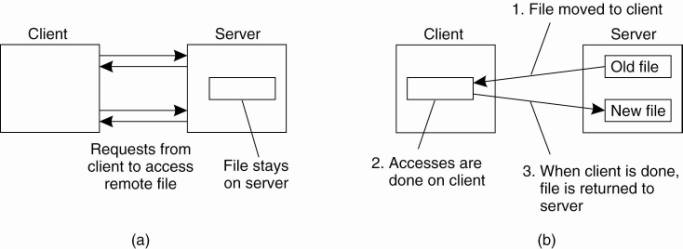

referred to as the remote access model. It is shown in Fig. 11-1(a).

Figure 11-1. (a) The remote

access model. (b) The upload/download model.

In contrast, in the

upload/download model a client accesses a file locally after having downloaded

it from the server, as shown in Fig. 11-1(b). When the client is finished with

the file, it is uploaded back to the server again so that it can be used by

another client. The Internet's FTP service can be used this way when a client

downloads a complete file, modifies it, and then puts it back.

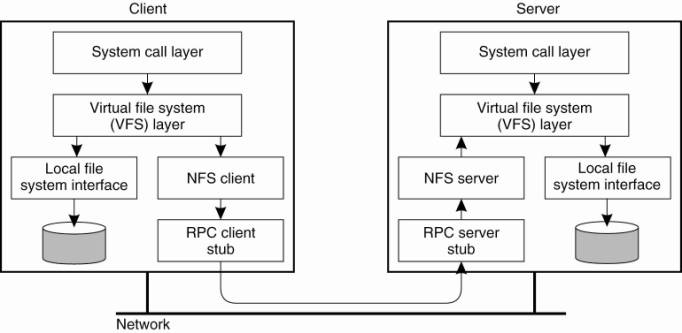

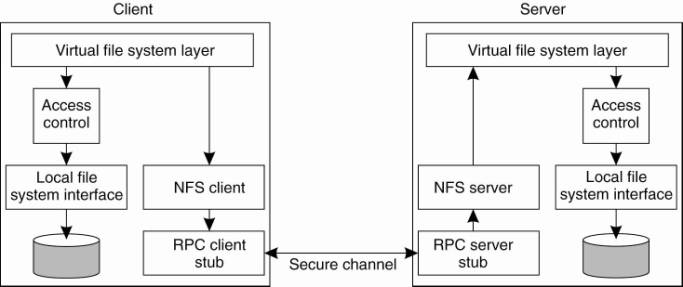

NFS has been implemented for

a large number of different operating systems, although the UNIX-based versions

are predominant. For virtually all modern UNIX systems, NFS is generally

implemented following the layered architecture shown in Fig. 11-2.

Figure 11-2. The basic NFS

architecture for UNIX systems.

(This item is displayed on

page 493 in the print version)

A client accesses the file

system using the system calls provided by its local operating system. However,

the local UNIX file system interface is replaced by an interface to the Virtual

File System (VFS), which by now is a de facto standard for interfacing to

different (distributed) file systems (Kleiman, 1986). Virtually all modern

operating systems provide VFS, and not doing so more or less forces developers

to largely reimplement huge of an operating system when adopting a new

file-system structure. With NFS, operations on the VFS interface are either

passed to a local file system, or passed to a separate component known as the

NFS client, which takes care of handling access to files stored at a remote

server. In NFS, all client-server communication is done through RPCs. The NFS

client implements the NFS file system operations as RPCs to the server. Note

that the operations offered by the VFS interface can be different from those

offered by the NFS client. The whole idea of the VFS is to hide the differences

between various file systems.

[Page 493]

On the server side, we see a

similar organization. The NFS server is responsible for handling incoming

client requests. The RPC stub unmarshals requests and the NFS server converts

them to regular VFS file operations that are subsequently passed to the VFS

layer. Again, the VFS is responsible for implementing a local file system in

which the actual files are stored.

An important advantage of

this scheme is that NFS is largely independent of local file systems. In

principle, it really does not matter whether the operating system at the client

or server implements a UNIX file system, a Windows 2000 file system, or even an

old MS-DOS file system. The only important issue is that these file systems are

compliant with the file system model offered by NFS. For example, MS-DOS with

its short file names cannot be used to implement an NFS server in a fully

transparent way.

File System Model

The file system model

offered by NFS is almost the same as the one offered by UNIX-based systems.

Files are treated as uninterpreted sequences of bytes. They are hierarchically

organized into a naming graph in which nodes represent directories and files.

NFS also supports hard links as well as symbolic links, like any UNIX file

system. Files are named, but are otherwise accessed by means of a UNIX-like

file handle, which we discuss in detail below. In other words, to access a

file, a client must first look up its name in a naming service and obtain the

associated file handle. Furthermore, each file has a number of attributes whose

values can be looked up and changed. We return to file naming in detail later

in this chapter.

Fig. 11-3 shows the general

file operations supported by NFS versions 3 and 4, respectively. The create operation

is used to create a file, but has somewhat different meanings in NFSv3 and

NFSv4. In version 3, the operation is used for creating regular files. Special

files are created using separate operations. The link operation is used to

create hard links. Symlink is used to create symbolic links. Mkdir is used to

create subdirectories. Special files, such as device files, sockets, and named

pipes are created by means of the mknod operation.

Figure 11-3. An incomplete

list of file system operations supported by NFS.

(This item is displayed on

page 495 in the print version)

|

Operation |

v3 |

v4 |

Description |

|

Create |

Yes |

No |

Create a regular file |

|

Create |

No |

Yes |

Create a nonregular file |

|

Link |

Yes |

Yes |

Create a hard link to a

file |

|

Symlink |

Yes |

No |

Create a symbolic link to

a file |

|

Mkdir |

Yes |

No |

Create a subdirectory in a

given directory |

|

Mknod |

Yes |

No |

Create a special file |

|

Rename |

Yes |

Yes |

Change the name of a file |

|

Remove |

Yes |

Yes |

Remove a file from a file

system |

|

Rmdir |

Yes |

No |

Remove an empty

subdirectory from a directory |

|

Open |

No |

Yes |

Open a file |

|

Close |

No |

Yes |

Close a file |

|

Lookup |

Yes |

Yes |

Look up a file by means of

a file name |

|

Readdir |

Yes |

Yes |

Read the entries in a

directory |

|

Readlink |

Yes |

Yes |

Read the path name stored

in a symbolic link |

|

Getattr |

Yes |

Yes |

Get the attribute values for

a file |

|

Setattr |

Yes |

Yes |

Set one or more attribute

values for a file |

|

Read |

Yes |

Yes |

Read the data contained in

a file |

|

Write |

Yes |

Yes |

Write data to a file |

This situation is changed

completely in NFSv4, where create is used for creating nonregular files, which

include symbolic links, directories, and special files. Hard links are still

created using a separate link operation, but regular files are created by means

of the open operation, which is new to NFS and is a major deviation from the

approach to file handling in older versions. Up until version 4, NFS was

designed to allow its file servers to be stateless. For reasons we discuss

later in this chapter, this design criterion has been abandoned in NFSv4, in

which it is assumed that servers will generally maintain state between

operations on the same file.

The operation rename is used

to change the name of an existing file the same as in UNIX.

Files are deleted by means

of the remove operation. In version 4, this operation is used to remove any

kind of file. In previous versions, a separate rmdir operation was needed to

remove a subdirectory. A file is removed by its name and has the effect that

the number of hard links to it is decreased by one. If the number of links

drops to zero, the file may be destroyed.

Version 4 allows clients to

open and close (regular) files. Opening a nonexisting file has the side effect

that a new file is created. To open a file, a client provides a name, along

with various values for attributes. For example, a client may specify that a

file should be opened for write access. After a file has been successfully

opened, a client can access that file by means of its file handle. That handle

is also used to close the file, by which the client tells the server that it

will no longer need to have access to the file. The server, in turn, can

release any state it maintained to provide that client access to the file.

[Page 495]

The lookup operation is used

to look up a file handle for a given path name. In NFSv3, the lookup operation

will not resolve a name beyond a mount point. (Recall from Chap. 5 that a mount

point is a directory that essentially represents a link to a subdirectory in a

foreign name space.) For example, assume that the name /remote/vu refers to a

mount point in a naming graph. When resolving the name /remote/vu/mbox, the

lookup operation in NFSv3 will return the file handle for the mount point

/remote/vu along with the remainder of the path name (i.e., mbox). The client

is then required to explicitly mount the file system that is needed to complete

the name lookup. A file system in this context is the collection of files,

attributes, directories, and data blocks that are jointly implemented as a

logical block device (Tanenbaum and Woodhull, 2006).

In version 4, matters have

been simplified. In this case, lookup will attempt to resolve the entire name,

even if this means crossing mount points. Note that this approach is possible

only if a file system has already been mounted at mount points. The client is

able to detect that a mount point has been crossed by inspecting the file

system identifier that is later returned when the lookup completes.

There is a separate

operation readdir to read the entries in a directory. This operation returns a

list of (name, file handle) pairs along with attribute values that the client

requested. The client can also specify how many entries should be returned. The

operation returns an offset that can be used in a subsequent call to readdir in

order to read the next series of entries.

[Page 496]

Operation readlink is used

to read the data associated with a symbolic link. Normally, this data

corresponds to a path name that can be subsequently looked up. Note that the

lookup operation cannot handle symbolic links. Instead, when a symbolic link is

reached, name resolution stops and the client is required to first call

readlink to find out where name resolution should continue.

Files have various

attributes associated with them. Again, there are important differences between

NFS version 3 and 4, which we discuss in detail later. Typical attributes

include the type of the file (telling whether we are dealing with a directory,

a symbolic link, a special file, etc.), the file length, the identifier of the

file system that contains the file, and the last time the file was modified.

File attributes can be read and set using the operations getattr and setattr,

respectively.

Finally, there are

operations for reading data from a file, and writing data to a file. Reading

data by means of the operation read is completely straightforward. The client

specifies the offset and the number of bytes to be read. The client is returned

the actual number of bytes that have been read, along with additional status

information (e.g., whether the end-of-file has been reached).

Writing data to a file is

done using the write operation. The client again specifies the position in the

file where writing should start, the number of bytes to be written, and the

data. In addition, it can instruct the server to ensure that all data are to be

written to stable storage (we discussed stable storage in Chap. 8). NFS servers

are required to support storage devices that can survive power supply failures,

operating system failures, and hardware failures.

11.1.2. Cluster-Based

Distributed File Systems

NFS is a typical example for

many distributed file systems, which are generally organized according to a

traditional client-server architecture. This architecture is often enhanced for

server clusters with a few differences.

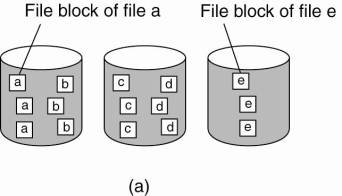

Considering that server

clusters are often used for parallel applications, it is not surprising that

their associated file systems are adjusted accordingly. One well-known

technique is to deploy file-striping techniques, by which a single file is

distributed across multiple servers. The basic idea is simple: by distributing

a large file across multiple servers, it becomes possible to fetch different

parts in parallel. Of course, such an organization works well only if the

application is organized in such a way that parallel data access makes sense.

This generally requires that the data as stored in the file have a very regular

structure, for example, a (dense) matrix.

For general-purpose

applications, or those with irregular or many different types of data

structures, file striping may not be an effective tool. In those cases, it is

often more convenient to partition the file system as a whole and simply store

different files on different servers, but not to partition a single file across

multiple servers. The difference between these two approaches is shown in Fig.

11-4.

[Page 497]

Figure 11-4. The difference

between (a) distributing whole files across several servers and (b) striping

files for parallel access.

More interesting are the

cases of organizing a distributed file system for very large data centers such

as those used by companies like Amazon and Google. These companies offer

services to Web clients resulting in reads and updates to a massive number of

files distributed across literally tens of thousands of computers [see also

Barroso et al. (2003)]. In such environments, the traditional assumptions

concerning distributed file systems no longer hold. For example, we can expect

that at any single moment there will be a computer malfunctioning.

To address these problems,

Google, for example, has developed its own Google file system (GFS), of which

the design is described in Ghemawat et al. (2003). Google files tend to be very

large, commonly ranging up to multiple gigabytes, where each one contains lots

of smaller objects. Moreover, updates to files usually take place by appending

data rather than overwriting parts of a file. These observations, along with

the fact that server failures are the norm rather than the exception, lead to

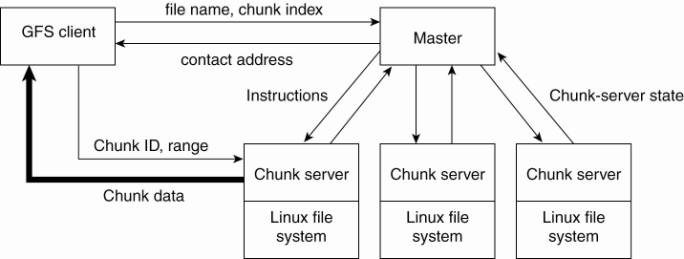

constructing clusters of servers as shown in Fig. 11-5.

Figure 11-5. The

organization of a Google cluster of servers.

Each GFS cluster consists of

a single master along with multiple chunk servers. Each GFS file is divided

into chunks of 64 Mbyte each, after which these chunks are distributed across

what are called chunk servers. An important observation is that a GFS master is

contacted only for metadata information. In particular, a GFS client passes a

file name and chunk index to the master, expecting a contact address for the

chunk. The contact address contains all the information to access the correct

chunk server to obtain the required file chunk.

[Page 498]

To this end, the GFS master

essentially maintains a name space, along with a mapping from file name to

chunks. Each chunk has an associated identifier that will allow a chunk server

to lookup it up. In addition, the master keeps track of where a chunk is

located. Chunks are replicated to handle failures, but no more than that. An

interesting feature is that the GFS master does not attempt to keep an accurate

account of chunk locations. Instead, it occasionally contacts the chunk servers

to see which chunks they have stored.

The advantage of this scheme

is simplicity. Note that the master is in control of allocating chunks to chunk

servers. In addition, the chunk servers keep an account of what they have

stored. As a consequence, once the master has obtained chunk locations, it has

an accurate picture of where data is stored. However, matters would become

complicated if this view had to be consistent all the time. For example, every

time a chunk server crashes or when a server is added, the master would need to

be informed. Instead, it is much simpler to refresh its information from the

current set of chunk servers through polling. GFS clients simply get to know

which chunk servers the master believes is storing the requested data. Because

chunks are replicated anyway, there is a high probability that a chunk is

available on at least one of the chunk servers.

Why does this scheme scale?

An important design issue is that the master is largely in control, but that it

does not form a bottleneck due to all the work it needs to do. Two important

types of measures have been taken to accommodate scalability.

First, and by far the most

important one, is that the bulk of the actual work is done by chunk servers.

When a client needs to access data, it contacts the master to find out which

chunk servers hold that data. After that, it communicates only with the chunk

servers. Chunks are replicated according to a primary-backup scheme. When the

client is performing an update operation, it contacts the nearest chunk server

holding that data, and pushes its updates to that server. This server will push

the update to the next closest one holding the data, and so on. Once all

updates have been propagated, the client will contact the primary chunk server,

who will then assign a sequence number to the update operation and pass it on

to the backups. Meanwhile, the master is kept out of the loop.

Second, the (hierarchical)

name space for files is implemented using a simple single-level table, in which

path names are mapped to metadata (such as the equivalent of inodes in

traditional file systems). Moreover, this entire table is kept in main memory,

along with the mapping of files to chunks. Updates on these data are logged to

persistent storage. When the log becomes too large, a checkpoint is made by

which the main-memory data is stored in such a way that it can be immediately

mapped back into main memory. As a consequence, the intensity of I/O of a GFS

master is strongly reduced.

[Page 499]

This organization allows a

single master to control a few hundred chunk servers, which is a considerable

size for a single cluster. By subsequently organizing a service such as Google

into smaller services that are mapped onto clusters, it is not hard to imagine

that a huge collection of clusters can be made to work together.

11.1.3. Symmetric

Architectures

Of course, fully symmetric

organizations that are based on peer-to-peer technology also exist. All current

proposals use a DHT-based system for distributing data, combined with a

key-based lookup mechanism. An important difference is whether they build a

file system on top of a distributed storage layer, or whether whole files are

stored on the participating nodes.

An example of the first type

of file system is Ivy, a distributed file system that is built using a Chord

DHT-based system. Ivy is described in Muthitacharoen et al. (2002). Their

system essentially consists of three separate layers as shown in Fig. 11-6. The

lowest layer is formed by a Chord system providing basic decentralized lookup

facilities. In the middle is a fully distributed block-oriented storage layer.

Finally, on top there is a layer implementing an NFS-like file system.

Figure 11-6. The

organization of the Ivy distributed file system.

Data storage in Ivy is

realized by a Chord-based, block-oriented distributed storage system called

DHash (Dabek et al., 2001). In essence, DHash is quite simple. It only knows

about data blocks, each block typically having a size of 8 KB. Ivy uses two kinds

of data blocks. A content-hash block has an associated key, which is computed

as the secure hash of the block's content. In this way, whenever a block is

looked up, a client can immediately verify whether the correct block has been

looked up, or that another or corrupted version is returned. Furthermore, Ivy

also makes use of public-key blocks, which are blocks having a public key as

lookup key, and whose content has been signed with the associated private key.

[Page 500]

To increase availability,

DHash replicates every block B to the k immediate successors of the server

responsible for storing B. In addition, looked up blocks are also cached along

the route that the lookup request followed.

Files are implemented as a

separate data structure on top of DHash. To achieve this goal, each user

maintains a log of operations it carries out on files. For simplicity, we

assume that there is only a single user per node so that each node will have

its own log. A log is a linked list of immutable records, where each record

contains all the information related to an operation on the Ivy file system.

Each node appends records only to its own, local, log. Only a log's head is

mutable, and points to the most recently appended record. Each record is stored

in a separate content-hash block, whereas a log's head is kept in a public-key

block.

There are different types of

records, roughly corresponding to the different operations supported by NFS.

For example, when performing an update operation on a file, a write record is created,

containing the file's identifier along with the offset for the pile pointer and

the data that is being written. Likewise, there are records for creating files

(i.e., adding a new inode), manipulating directories, etc.

To create a new file system,

a node simply creates a new log along with a new inode that will serve as the

root. Ivy deploys what is known as an NFS loopback server which is just a local

user-level server that accepts NFS requests from local clients. In the case of

Ivy, this NFS server supports mounting the newly created file system allowing

applications to access it as any other NFS file system.

When performing a read

operation, the local Ivy NFS server makes a pass over the log, collecting data

from those records that represent write operations on the same block of data,

allowing it to retrieve the most recently stored values. Note that because each

record is stored as a DHash block, multiple lookups across the overlay network

may be needed to retrieve the relevant values.

Instead of using a separate

block-oriented storage layer, alternative designs propose to distribute whole

files instead of data blocks. The developers of Kosha (Butt et al. 2004)

propose to distribute files at a specific directory level. In their approach,

each node has a mount point named /kosha containing the files that are to be

distributed using a DHT-based system. Distributing files at directory level 1

means that all files in a subdirectory /kosha/a will be stored at the same

node. Likewise, distribution at level 2 implies that all files stored in

subdirectory /kosha/a/aa are stored at the same node. Taking a level-1

distribution as an example, the node responsible for storing files under

/kosha/a is found by computing the hash of a and taking that as the key in a

lookup.

The potential drawback of

this approach is that a node may run out of disk space to store all the files

contained in the subdirectory that it is responsible for. Again, a simple

solution is found in placing a branch of that subdirectory on another node and

creating a symbolic link to where the branch is now stored.

11.2. Processes

When it comes to processes,

distributed file systems have no unusual properties. In many cases, there will

be different types of cooperating processes: storage servers and file managers,

just as we described above for the various organizations.

The most interesting aspect

concerning file system processes is whether or not they should be stateless.

NFS is a good example illustrating the trade-offs. One of its long-lasting

distinguishing features (compared to other distributed file systems), was the

fact that servers were stateless. In other words, the NFS protocol did not

require that servers maintained any client state. This approach was followed in

versions 2 and 3, but has been abandoned for version 4.

The primary advantage of the

stateless approach is simplicity. For example, when a stateless server crashes,

there is essentially no need to enter a recovery phase to bring the server to a

previous state. However, as we explained in Chap. 8, we still need to take into

account that the client cannot be given any guarantees whether or not a request

has actually been carried out.

The stateless approach in

the NFS protocol could not always be fully followed in practical

implementations. For example, locking a file cannot easily be done by a

stateless server. In the case of NFS, a separate lock manager is used to handle

this situation. Likewise, certain authentication protocols require that the

server maintains state on its clients. Nevertheless, NFS servers could

generally be designed in such a way that only very little information on

clients needed to be maintained. For the most part, the scheme worked

adequately.

Starting with version 4, the

stateless approach was abandoned, although the new protocol is designed in such

a way that a server does not need to maintain much information about its

clients. Besides those just mentioned, there are other reasons to choose for a

stateful approach. An important reason is that NFS version 4 is expected to

also work across wide-area networks. This requires that clients can make

effective use of caches, in turn requiring an efficient cache consistency

protocol. Such protocols often work best in collaboration with a server that

maintains some information on files as used by its clients. For example, a

server may associate a lease with each file it hands out to a client, promising

to give the client exclusive read and write access until the lease expires or

is refreshed. We return to such issues later in this chapter.

The most apparent difference

with the previous versions is the support for the open operation. In addition,

NFS supports callback procedures by which a server can do an RPC to a client.

Clearly, callbacks also require a server to keep track of its clients.

Similar reasoning has

affected the design of other distributed file systems. By and large, it turns

out that maintaining a fully stateless design can be quite difficult, often

leading to building stateful solutions as an enhancement, such as is the case

with NFS file locking.

11.3. Communication

As with processes, there is

nothing particularly special or unusual about communication in distributed file

systems. Many of them are based on remote procedure calls (RPCs), although some

interesting enhancements have been made to support special cases. The main reason

for choosing an RPC mechanism is to make the system independent from underlying

operating systems, networks, and transport protocols.

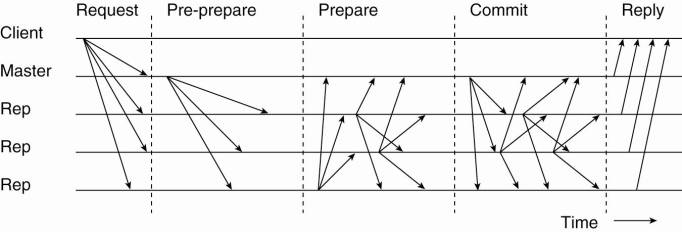

11.3.1. RPCs in NFS

For example, in NFS, all

communication between a client and server proceeds along the Open Network Computing

RPC (ONC RPC) protocol, which is formally defined in Srinivasan (1995a), along

with a standard for representing marshaled data (Srinivasan, 1995b). ONC RPC is

similar to other RPC systems as we discussed in Chap. 4.

Every NFS operation can be

implemented as a single remote procedure call to a file server. In fact, up

until NFSv4, the client was made responsible for making the server's life as

easy as possible by keeping requests relatively simple. For example, in order

to read data from a file for the first time, a client normally first had to

look up the file handle using the lookup operation, after which it could issue

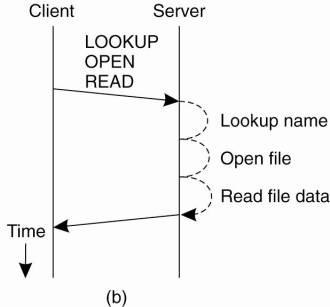

a read request, as shown in Fig. 11-7(a).

Figure 11-7. (a) Reading

data from a file in NFS version 3. (b) Reading data using a compound procedure

in version 4.

This approach required two

successive RPCs. The drawback became apparent when considering the use of NFS

in a wide-area system. In that case, the extra latency of a second RPC led to

performance degradation. To circumvent such problems, NFSv4 supports compound

procedures by which several RPCs can be grouped into a single request, as shown

in Fig. 11-7(b).

[Page 503]

In our example, the client

combines the lookup and read request into a single RPC. In the case of version

4, it is also necessary to open the file before reading can take place. After

the file handle has been looked up, it is passed to the open operation, after

which the server continues with the read operation. The overall effect in this

example is that only two messages need to be exchanged between the client and

server.

There are no transactional

semantics associated with compound procedures. The operations grouped together

in a compound procedure are simply handled in the order as requested. If there

are concurrent operations from other clients, then no measures are taken to

avoid conflicts. If an operation fails for whatever reason, then no further

operations in the compound procedure are executed, and the results found so far

are returned to the client. For example, if lookup fails, a succeeding open is

not even attempted.

11.3.2. The RPC2 Subsystem

Another interesting

enhancement to RPCs has been developed as part of the Coda file system (Kistler

and Satyanarayanan, 1992). RPC2 is a package that offers reliable RPCs on top

of the (unreliable) UDP protocol. Each time a remote procedure is called, the

RPC2 client code starts a new thread that sends an invocation request to the

server and subsequently blocks until it receives an answer. As request

processing may take an arbitrary time to complete, the server regularly sends

back messages to the client to let it know it is still working on the request.

If the server dies, sooner or later this thread will notice that the messages

have ceased and report back failure to the calling application.

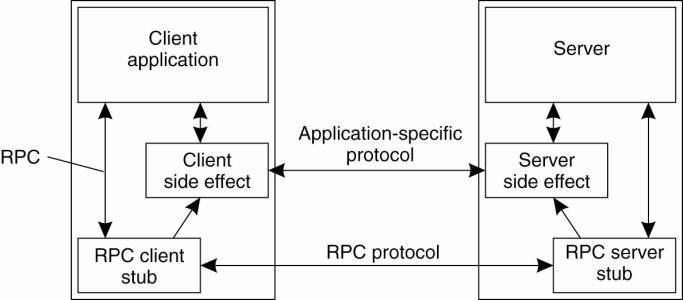

An interesting aspect of

RPC2 is its support for side effects. A side effect is a mechanism by which the

client and server can communicate using an application-specific protocol.

Consider, for example, a client opening a file at a video server. What is needed

in this case is that the client and server set up a continuous data stream with

an isochronous transmission mode. In other words, data transfer from the server

to the client is guaranteed to be within a minimum and maximum end-to-end

delay.

RPC2 allows the client and

the server to set up a separate connection for transferring the video data to

the client on time. Connection setup is done as a side effect of an RPC call to

the server. For this purpose, the RPC2 runtime system provides an interface of

side-effect routines that is to be implemented by the application developer.

For example, there are routines for setting up a connection and routines for

transferring data. These routines are automatically called by the RPC2 runtime

system at the client and server, respectively, but their implementation is

otherwise completely independent of RPC2. This principle of side effects is

shown in Fig. 11-8.

Figure 11-8. Side effects in

Coda's RPC2 system.

(This item is displayed on

page 504 in the print version)

Another feature of RPC2 that

makes it different from other RPC systems is its support for multicasting. An

important design issue in Coda is that servers keep track of which clients have

a local copy of a file. When a file is modified, a server invalidates local

copies by notifying the appropriate clients through an RPC. Clearly, if a

server can notify only one client at a time, invalidating all clients may take

some time, as illustrated in Fig. 11-9(a).

[Page 504]

Figure 11-9. (a) Sending an

invalidation message one at a time. (b) Sending invalidation messages in

parallel.

[

The problem is caused by the

fact that an RPC may occasionally fail. Invalidating files in a strict

sequential order may be delayed considerably because the server cannot reach a

possibly crashed client, but will give up on that client only after a

relatively long expiration time. Meanwhile, other clients will still be reading

from their local copies.

An alternative (and better)

solution is shown in Fig. 11-9(b). Here, instead of invalidating each copy one

by one, the server sends an invalidation message to all clients at the same

time. As a consequence, all nonfailing clients are notified in the same time as

it would take to do an immediate RPC. Also, the server notices within the usual

expiration time that certain clients are failing to respond to the RPC, and can

declare such clients as being crashed.

[Page 505]

Parallel RPCs are

implemented by means of the MultiRPC system, which is part of the RPC2 package

(Satyanarayanan and Siegel, 1990). An important aspect of MultiRPC is that the

parallel invocation of RPCs is fully transparent to the callee. In other words,

the receiver of a MultiRPC call cannot distinguish that call from a normal RPC.

At the caller's side, parallel execution is also largely transparent. For

example, the semantics of MultiRPC in the presence of failures are much the

same as that of a normal RPC. Likewise, the side-effect mechanisms can be used

in the same way as before.

MultiRPC is implemented by

essentially executing multiple RPCs in parallel. This means that the caller

explicitly sends an RPC request to each recipient. However, instead of

immediately waiting for a response, it defers blocking until all requests have

been sent. In other words, the caller invokes a number of one-way RPCs, after

which it blocks until all responses have been received from the nonfailing

recipients. An alternative approach to parallel execution of RPCs in MultiRPC

is provided by setting up a multicast group, and sending an RPC to all group

members using IP multicast.

11.3.3. File-Oriented

Communication in Plan 9

Finally, it is worth

mentioning a completely different approach to handling communication in

distributed file systems. Plan 9 (Pike et al., 1995). is not so much a

distributed file system, but rather a file-based distributed system. All

resources are accessed in the same way, namely with file-like syntax and

operations, including even resources such as processes and network interfaces.

This idea is inherited from UNIX, which also attempts to offer file-like

interfaces to resources, but it has been exploited much further and more

consistently in Plan 9. To illustrate, network interfaces are represented by a

file system, in this case consisting of a collection of special files. This

approach is similar to UNIX, although network interfaces in UNIX are

represented by files and not file systems. (Note that a file system in this

context is again the logical block device containing all the data and metadata that

comprise a collection of files.) In Plan 9, for example, an individual TCP

connection is represented by a subdirectory consisting of the files shown in

Fig. 11-10.

Figure 11-10. Files

associated with a single TCP connection in Plan 9.

(This item is displayed on

page 506 in the print version)

|

File |

Description |

|

ctl |

Used to write

protocol-specific control commands |

|

data |

Used to read and write

data |

|

listen |

Used to accept incoming

connection setup requests |

|

local |

Provides information on

the caller's side of the connection |

|

remote |

Provides information on

the other side of the connection |

|

status |

Provides diagnostic

information on the current status of the connection |

The file ctl is used to send

control commands to the connection. For example, to open a telnet session to a

machine with IP address 192.31.231.42 using port 23, requires that the sender

writes the text string "connect 192.31.231.42!23" to file ctl. The

receiver would previously have written the string "announce 23" to

its own ctl file, indicating that it can accept incoming session requests.

The data file is used to

exchange data by simply performing read and write operations. These operations

follow the usual UNIX semantics for file operations.

[Page 506]

For example, to write data

to a connection, a process simply invokes the operation

res = write(fd, buf,

nbytes);

where fd is the file

descriptor returned after opening the data file, buf is a pointer to a buffer

containing the data to be written, and nbytes is the number of bytes that

should be extracted from the buffer. The number of bytes actually written is

returned and stored in the variable res.

The file listen is used to

wait for connection setup requests. After a process has announced its

willingness to accept new connections, it can do a blocking read on file

listen. If a request comes in, the call returns a file descriptor to a new ctl

file corresponding to a newly-created connection directory. It is thus seen how

a completely file-oriented approach toward communication can be realized.

11.4. Naming

Naming arguably plays an

important role in distributed file systems. In virtually all cases, names are

organized in a hierarchical name space like those we discussed in Chap. 5. In

the following we will again consider NFS as a representative for how naming is

often handled in distributed file systems.

11.4.1. Naming in NFS

The fundamental idea

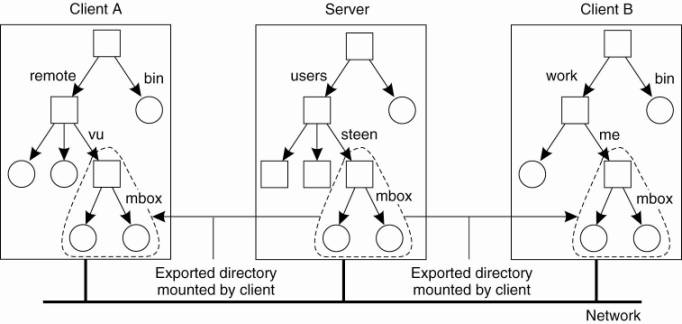

underlying the NFS naming model is to provide clients complete transparent

access to a remote file system as maintained by a server. This transparency is

achieved by letting a client be able to mount a remote file system into its own

local file system, as shown in Fig. 11-11.

Figure 11-11. Mounting (part

of) a remote file system in NFS.

(This item is displayed on

page 507 in the print version)

Instead of mounting an

entire file system, NFS allows clients to mount only part of a file system, as

also shown in Fig. 11-11. A server is said to export a directory when it makes

that directory and its entries available to clients. An exported directory can

be mounted into a client's local name space.

[Page 507]

This design approach has a

serious implication: in principle, users do not share name spaces. As shown in

Fig. 11-11, the file named /remote/vu/mbox at client A is named /work/me/mbox

at client B. A file's name therefore depends on how clients organize their own

local name space, and where exported directories are mounted. The drawback of

this approach in a distributed file system is that sharing files becomes much harder.

For example, Alice cannot tell Bob about a file using the name she assigned to

that file, for that name may have a completely different meaning in Bob's name

space of files.

There are several ways to

solve this problem, but the most common one is to provide each client with a

name space that is partly standardized. For example, each client may be using

the local directory /usr/bin to mount a file system containing a standard

collection of programs that are available to everyone. Likewise, the directory

/local may be used as a standard to mount a local file system that is located

on the client's host.

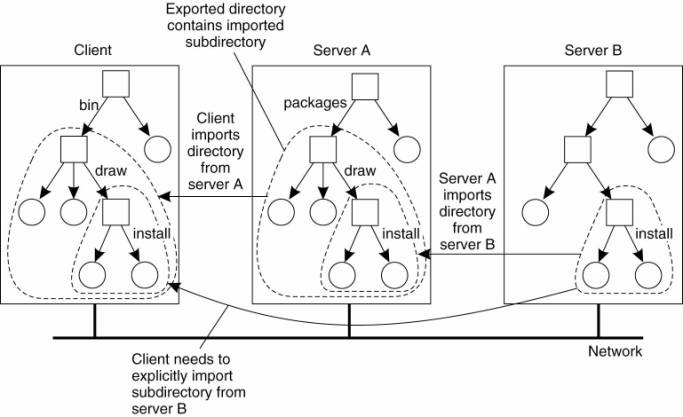

An NFS server can itself

mount directories that are exported by other servers. However, it is not

allowed to export those directories to its own clients. Instead, a client will

have to explicitly mount such a directory from the server that maintains it, as

shown in Fig. 11-12. This restriction comes partly from simplicity. If a server

could export a directory that it mounted from another server, it would have to

return special file handles that include an identifier for a server. NFS does

not support such file handles.

Figure 11-12. Mounting

nested directories from multiple servers in NFS.

(This item is displayed on

page 508 in the print version)

To explain this point in

more detail, assume that server A hosts a file system FSA from which it exports

the directory /packages. This directory contains a subdirectory /draw that acts

as a mount point for a file system FSB that is exported by server B and mounted

by A. Let A also export /packages/draw to its own clients, and assume that a

client has mounted /packages into its local directory /bin as shown in Fig.

11-12.

[Page 508]

If name resolution is

iterative (as is the case in NFSv3), then to resolve the name

/bin/draw/install, the client contacts server A when it has locally resolved

/bin and requests A to return a file handle for directory /draw. In that case,

server A should return a file handle that includes an identifier for server B,

for only B can resolve the rest of the path name, in this case /install. As we

have said, this kind of name resolution is not supported by NFS.

Name resolution in NFSv3

(and earlier versions) is strictly iterative in the sense that only a single

file name at a time can be looked up. In other words, resolving a name such as

/bin/draw/install requires three separate calls to the NFS server. Moreover,

the client is fully responsible for implementing the resolution of a path name.

NFSv4 also supports recursive name lookups. In this case, a client can pass a

complete path name to a server and request that server to resolve it.

There is another peculiarity

with NFS name lookups that has been solved with version 4. Consider a file

server hosting several file systems. With the strict iterative name resolution

in version 3, whenever a lookup was done for a directory on which another file

system was mounted, the lookup would return the file handle of the directory.

Subsequently reading that directory would return its original content, not that

of the root directory of the mounted file system.

[Page 509]

To explain, assume that in

our previous example that both file systems FSA and FSB are hosted by a single

server. If the client has mounted /packages into its local directory /bin, then

looking up the file name draw at the server would return the file handle for

draw. A subsequent call to the server for listing the directory entries of draw

by means of readdir would then return the list of directory entries that were

originally stored in FSA in subdirectory /packages/draw. Only if the client had

also mounted file system FSB, would it be possible to properly resolve the path

name draw/install relative to /bin.

NFSv4 solves this problem by

allowing lookups to cross mount points at a server. In particular, lookup

returns the file handle of the mounted directory instead of that of the

original directory. The client can detect that the lookup has crossed a mount

point by inspecting the file system identifier of the looked up file. If

required, the client can locally mount that file system as well.

File Handles

A file handle is a reference

to a file within a file system. It is independent of the name of the file it

refers to. A file handle is created by the server that is hosting the file

system and is unique with respect to all file systems exported by the server.

It is created when the file is created. The client is kept ignorant of the

actual content of a file handle; it is completely opaque. File handles were 32

bytes in NFS version 2, but were variable up to 64 bytes in version 3 and 128

bytes in version 4. Of course, the length of a file handle is not opaque.

Ideally, a file handle is

implemented as a true identifier for a file relative to a file system. For one

thing, this means that as long as the file exists, it should have one and the

same file handle. This persistence requirement allows a client to store a file

handle locally once the associated file has been looked up by means of its

name. One benefit is performance: as most file operations require a file handle

instead of a name, the client can avoid having to look up a name repeatedly

before every file operation. Another benefit of this approach is that the

client can now access the file independent of its (current) names.

Because a file handle can be

locally stored by a client, it is also important that a server does not reuse a

file handle after deleting a file. Otherwise, a client may mistakenly access

the wrong file when it uses its locally stored file handle.

Note that the combination of

iterative name lookups and not letting a lookup operation allow crossing a

mount point introduces a problem with getting an initial file handle. In order

to access files in a remote file system, a client will need to provide the

server with a file handle of the directory where the lookup should take place,

along with the name of the file or directory that is to be resolved. NFSv3

solves this problem through a separate mount protocol, by which a client

actually mounts a remote file system. After mounting, the client is passed back

the root file handle of the mounted file system, which it can subsequently use

as a starting point for looking up names.

[Page 510]

In NFSv4, this problem is

solved by providing a separate operation putrootfh that tells the server to

solve all file names relative to the root file handle of the file system it

manages. The root file handle can be used to look up any other file handle in

the server's file system. This approach has the additional benefit that there

is no need for a separate mount protocol. Instead, mounting can be integrated

into the regular protocol for looking up files. A client can simply mount a

remote file system by requesting the server to resolve names relative to the

file system's root file handle using putrootfh.

Automounting

As we mentioned, the NFS

naming model essentially provides users with their own name space. Sharing in

this model may become difficult if users name the same file differently. One

solution to this problem is to provide each user with a local name space that

is partly standardized, and subsequently mounting remote file systems the same

for each user.

Another problem with the NFS

naming model has to do with deciding when a remote file system should be

mounted. Consider a large system with thousands of users. Assume that each user

has a local directory /home that is used to mount the home directories of other

users. For example, Alice's home directory may be locally available to her as

/home/alice, although the actual files are stored on a remote server. This

directory can be automatically mounted when Alice logs into her workstation. In

addition, she may have access to Bob's public files by accessing Bob's

directory through /home/bob.

The question, however, is

whether Bob's home directory should also be mounted automatically when Alice

logs in. The benefit of this approach would be that the whole business of

mounting file systems would be transparent to Alice. However, if this policy

were followed for every user, logging in could incur a lot of communication and

administrative overhead. In addition, it would require that all users are known

in advance. A much better approach is to transparently mount another user's

home directory on demand, that is, when it is first needed.

On-demand mounting of a

remote file system (or actually an exported directory) is handled in NFS by an

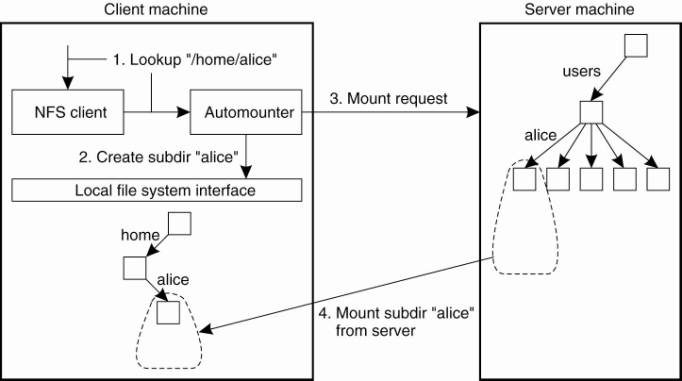

automounter, which runs as a separate process on the client's machine. The

principle underlying an automounter is relatively simple. Consider a simple

automounter implemented as a user-level NFS server on a UNIX operating system.

For alternative implementations, see Callaghan (2000).

Assume that for each user,

the home directories of all users are available through the local directory

/home, as described above. When a client machine boots, the automounter starts

with mounting this directory. The effect of this local mount is that whenever a

program attempts to access /home, the UNIX kernel will forward a lookup

operation to the NFS client, which in this case, will forward the request to

the automounter in its role as NFS server, as shown in Fig. 11-13.

Figure 11-13. A simple

automounter for NFS.

(This item is displayed on

page 511 in the print version)

[Page 511]

For example, suppose that

Alice logs in. The login program will attempt to read the directory /home/alice

to find information such as login scripts. The automounter will thus receive

the request to look up subdirectory /home/alice, for which reason it first

creates a subdirectory /alice in /home. It then looks up the NFS server that

exports Alice's home directory to subsequently mount that directory in

/home/alice. At that point, the login program can proceed.

The problem with this

approach is that the automounter will have to be involved in all file

operations to guarantee transparency. If a referenced file is not locally

available because the corresponding file system has not yet been mounted, the

automounter will have to know. In particular, it will need to handle all read

and write requests, even for file systems that have already been mounted. This

approach may incur a large performance problem. It would be better to have the

auto mounter only mount/unmount directories, but otherwise stay out of the

loop.

A simple solution is to let

the automounter mount directories in a special subdirectory, and install a

symbolic link to each mounted directory. This approach is shown in Fig. 11-14.

Figure 11-14. Using symbolic

links with automounting.

(This item is displayed on

page 512 in the print version)

In our example, the user

home directories are mounted as subdirectories of /tmp_mnt. When Alice logs in,

the automounter mounts her home directory in /tmp_mnt/home/alice and creates a

symbolic link /home/alice that refers to that subdirectory. In this case,

whenever Alice executes a command such as

ls –l /home/alice

the NFS server that exports

Alice's home directory is contacted directly without further involvement of the

automounter.

[Page 512]

11.4.2. Constructing a

Global Name Space

Large distributed systems

are commonly constructed by gluing together various legacy systems into one

whole. When it comes to offering shared access to files, having a global name

space is about the minimal glue that one would like to have. At present, file

systems are mostly opened for sharing by using primitive means such as access through

FTP. This approach, for example, is generally used in Grid computing.

More sophisticated

approaches are followed by truly wide-area distributed file systems, but these

often require modifications to operating system kernels in order to be adopted.

Therefore, researchers have been looking for approaches to integrate existing

file systems into a single, global name space but using only user-level

solutions. One such system, simply called Global Name Space Service (GNS) is

proposed by Anderson et al. (2004).

GNS does not provide

interfaces to access files. Instead, it merely provides the means to set up a

global name space in which several existing name spaces have been merged. To

this end, a GNS client maintains a virtual tree in which each node is either a

directory or a junction. A junction is a special node that indicates that name

resolution is to be taken over by another process, and as such bears some

resemblance with a mount point in traditional file system. There are five

different types of junctions, as shown in Fig. 11-15.

Figure 11-15. Junctions in

GNS.

(This item is displayed on

page 513 in the print version)

|

Junction |

Description |

|

GNS junction |

Refers to another GNS

instance |

|

Logical file-system name |

Reference to subtree to be

looked up in a location service |

|

Logical file name |

Reference to a file to be

looked up in a location service |

|

Physical file-system name |

Reference to directly

remote-accessible subtree |

|

Physical file name |

Reference to directly

remote-accessible file |

A GNS junction simply refers

to another GNS instance, which is just another virtual tree hosted at possibly

another process. The two logical junctions contain information that is needed

to contact a location service. The latter will provide the contact address for

accessing a file system and a file, respectively. A physical file-system name

refers to a file system at another server, and corresponds largely to a contact

address that a logical junction would need. For example, a URL such as

ftp://ftp.cs.vu.nl/pub would contain all the information to access files at the

indicated FTP server. Analogously, a URL such as http://www.cs.vu.nl/index.htm

is a typical example of a physical file name.

[Page 513]

Obviously, a junction should

contain all the information needed to continue name resolution. There are many

ways of doing this, but considering that there are so many different file

systems, each specific junction will require its own implementation.

Fortunately, there are also many common ways of accessing remote files,

including protocols for communicating with NFS servers, FTP servers, and

Windows-based machines (notably CIFS).

GNS has the advantage of

decoupling the naming of files from their actual location. In no way does a

virtual tree relate to where files and directories are physically placed. In

addition, by using a location service it is also possible to move files around

without rendering their names unresolvable. In that case, the new physical

location needs to be registered at the location service. Note that this is completely

the same as what we have discussed in Chap. 5.

11.5. Synchronization

Let us now continue our

discussion by focusing on synchronization issues in distributed file systems.

There are various issues that require our attention. In the first place,

synchronization for file systems would not be an issue if files were not shared.

However, in a distributed system, the semantics of file sharing becomes a bit

tricky when performance issues are at stake. To this end, different solutions

have been proposed of which we discuss the most important ones next.

11.5.1. Semantics of File

Sharing

When two or more users share

the same file at the same time, it is necessary to define the semantics of

reading and writing precisely to avoid problems. In single-processor systems

that permit processes to share files, such as UNIX, the semantics normally

state that when a read operation follows a write operation, the read returns

the value just written, as shown in Fig. 11-16(a). Similarly, when two writes

happen in quick succession, followed by a read, the value read is the value

stored by the last write. In effect, the system enforces an absolute time

ordering on all operations and always returns the most recent value. We will

refer to this model as UNIX semantics. This model is easy to understand and

straightforward to implement.

[Page 514]

Figure 11-16. (a) On a

single processor, when a read follows a write, the value returned by the read

is the value just written. (b) In a distributed system with caching, obsolete

values may be returned.

In a distributed system,

UNIX semantics can be achieved easily as long as there is only one file server

and clients do not cache files. All reads and writes go directly to the file

server, which processes them strictly sequentially. This approach gives UNIX

semantics (except for the minor problem that network delays may cause a read

that occurred a microsecond after a write to arrive at the server first and

thus gets the old value).

In practice, however, the

performance of a distributed system in which all file requests must go to a

single server is frequently poor. This problem is often solved by allowing

clients to maintain local copies of heavily-used files in their private (local)

caches. Although we will discuss the details of file caching below, for the

moment it is sufficient to point out that if a client locally modifies a cached

file and shortly thereafter another client reads the file from the server, the

second client will get an obsolete file, as illustrated in Fig. 11-16(b).

[Page 515]

One way out of this

difficulty is to propagate all changes to cached files back to the server

immediately. Although conceptually simple, this approach is inefficient. An

alternative solution is to relax the semantics of file sharing. Instead of

requiring a read to see the effects of all previous writes, one can have a new

rule that says: "Changes to an open file are initially visible only to the

process (or possibly machine) that modified the file. Only when the file is

closed are the changes made visible to other processes (or machines)." The

adoption of such a rule does not change what happens in Fig. 11-16(b), but it

does redefine the actual behavior (B getting the original value of the file) as

being the correct one. When A closes the file, it sends a copy to the server,

so that subsequent reads get the new value, as required.

This rule is

widely-implemented and is known as session semantics. Most distributed file

systems implement session semantics. This means that although in theory they

follow the remote access model of Fig. 11-1(a), most implementations make use

of local caches, effectively implementing the upload/download model of Fig.

11-1(b).

Using session semantics

raises the question of what happens if two or more clients are simultaneously

caching and modifying the same file. One solution is to say that as each file

is closed in turn, its value is sent back to the server, so the final result

depends on whose close request is most recently processed by the server. A less

pleasant, but easier to implement alternative is to say that the final result

is one of the candidates, but leave the choice of which one unspecified.

A completely different

approach to the semantics of file sharing in a distributed system is to make

all files immutable. There is thus no way to open a file for writing. In

effect, the only operations on files are create and read.

What is possible is to

create an entirely new file and enter it into the directory system under the

name of a previous existing file, which now becomes inaccessible (at least

under that name). Thus although it becomes impossible to modify the file x, it

remains possible to replace x by a new file atomically. In other words,

although files cannot be updated, directories can be. Once we have decided that

files cannot be changed at all, the problem of how to deal with two processes,

one of which is writing on a file and the other of which is reading it, just

disappears, greatly simplifying the design.

What does remain is the

problem of what happens when two processes try to replace the same file at the

same time. As with session semantics, the best solution here seems to be to

allow one of the new files to replace the old one, either the last one or

nondeterministically.

A somewhat stickier problem

is what to do if a file is replaced while another process is busy reading it.

One solution is to somehow arrange for the reader to continue using the old

file, even if it is no longer in any directory, analogous to the way UNIX

allows a process that has a file open to continue using it, even after it has

been deleted from all directories. Another solution is to detect that the file

has changed and make subsequent attempts to read from it fail.

[Page 516]

A fourth way to deal with

shared files in a distributed system is to use atom\%ic transactions. To

summarize briefly, to access a file or a group of files, a process first

executes some type of BEGIN_TRANSACTION primitive to signal that what follows

must be executed indivisibly. Then come system calls to read and write one or

more files. When the requested work has been completed, an END_TRANSACTION

primitive is executed. The key property of this method is that the system

guarantees that all the calls contained within the transaction will be carried

out in order, without any interference from other, concurrent transactions. If

two or more transactions start up at the same time, the system ensures that the

final result is the same as if they were all run in some (undefined) sequential

order.

In Fig. 11-17 we summarize

the four approaches we have discussed for dealing with shared files in a

distributed system.

Figure 11-17. Four ways of

dealing with the shared files in a distributed system.

|

Method |

Comment |

|

UNIX semantics |

Every operation on a file

is instantly visible to all processes |

|

Session semantics |

No changes are visible to

other processes until the file is closed |

|

Immutable files |

No updates are possible;

simplifies sharing and replication |

|

Transactions |

All changes occur

atomically |

11.5.2. File Locking

Notably in client-server

architectures with stateless servers, we need additional facilities for

synchronizing access to shared files. The traditional way of doing this is to

make use of a lock manager. Without exception, a lock manager follows the

centralized locking scheme as we discussed in Chap. 6.

However, matters are not as

simple as we just sketched. Although a central lock manager is generally

deployed, the complexity in locking comes from the need to allow concurrent

access to the same file. For this reason, a great number of different locks

exist, and moreover, the granularity of locks may also differ. Let us consider

NFSv4 again.

Conceptually, file locking

in NFSv4 is simple. There are essentially only four operations related to

locking, as shown in Fig. 11-18. NFSv4 distinguishes read locks from write

locks. Multiple clients can simultaneously access the same part of a file

provided they only read data. A write lock is needed to obtain exclusive access

to modify part of a file.

Figure 11-18. NFSv4

operations related to file locking.

(This item is displayed on

page 517 in the print version)

|

Operation |

Description |

|

Lock |

Create a lock for a range

of bytes |

|

Lockt |

Test whether a conflicting

lock has been granted |

|

Locku |

Remove a lock from a range

of bytes |

|

Renew |

Renew the lease on a

specified lock |

Operation lock is used to

request a read or write lock on a consecutive range of bytes in a file. It is a

nonblocking operation; if the lock cannot be granted due to another conflicting

lock, the client gets back an error message and has to poll the server at a

later time. There is no automatic retry. Alternatively, the client can request

to be put on a FIFO-ordered list maintained by the server. As soon as the conflicting

lock has been removed, the server will grant the next lock to the client at the

top of the list, provided it polls the server before a certain time expires.

This approach prevents the server from having to notify clients, while still

being fair to clients whose lock request could not be granted because grants

are made in FIFO order.

[Page 517]

The lockt operation is used

to test whether a conflicting lock exists. For example, a client can test

whether there are any read locks granted on a specific range of bytes in a

file, before requesting a write lock for those bytes. In the case of a conflict,

the requesting client is informed exactly who is causing the conflict and on

which range of bytes. It can be implemented more efficiently than lock, because

there is no need to attempt to open a file.

Removing a lock from a file

is done by means of the locku operation.

Locks are granted for a

specific time (determined by the server). In other words, they have an

associated lease. Unless a client renews the lease on a lock it has been

granted, the server will automatically remove it. This approach is followed for

other server-provided resources as well and helps in recovery after failures.

Using the renew operation, a client requests the server to renew the lease on

its lock (and, in fact, other resources as well).

In addition to these

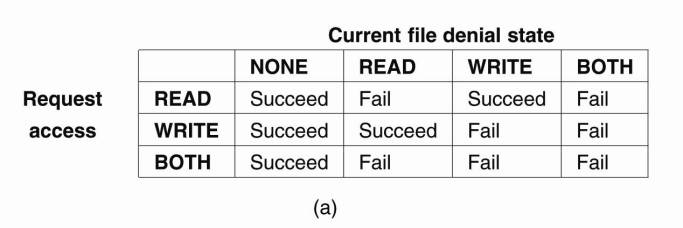

operations, there is also an implicit way to lock a file, referred to as share

reservation. Share reservation is completely independent from locking, and can

be used to implement NFS for Windows-based systems. When a client opens a file,

it specifies the type of access it requires (namely READ, WRITE, or BOTH), and

which type of access the server should deny other clients (NONE, READ, WRITE,

or BOTH). If the server cannot meet the client's requirements, the open

operation will fail for that client. In Fig. 11-19 we show exactly what happens

when a new client opens a file that has already been successfully opened by

another client. For an already opened file, we distinguish two different state

variables. The access state specifies how the file is currently being accessed

by the current client. The denial state specifies what accesses by new clients

are not permitted.

Figure 11-19. The result of

an open operation with share reservations in NFS. (a) When the client requests

shared access given the current denial state. (b) When the client requests a

denial state given the current file access state.

(This item is displayed on

page 518 in the print version)

In Fig. 11-19(a), we show

what happens when a client tries to open a file requesting a specific type of

access, given the current denial state of that file. Likewise, Fig. 11-19(b)

shows the result of opening a file that is currently being accessed by another

client, but now requesting certain access types to be disallowed.

[Page 518]

NFSv4 is by no means an

exception when it comes to offering synchronization mechanisms for shared

files. In fact, it is by now accepted that any simple set of primitives such as

only complete-file locking, reflects poor design. Complexity in locking schemes

comes mostly from the fact that a fine granularity of locking is required to

allow for concurrent access to shared files. Some attempts to reduce complexity

while keeping performance have been taken [see, e.g., Burns et al. (2001)], but

the situation remains somewhat unsatisfactory. In the end, we may be looking at

completely redesigning our applications for scalability rather than trying to

patch situations that come from wanting to share data the way we did in

nondistributed systems.

11.5.3. Sharing Files in

Coda

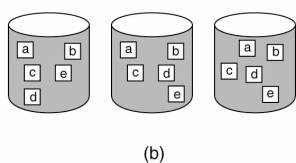

The session semantics in NFS

dictate that the last process that closes a file will have its changes

propagated to the server; any updates in concurrent, but earlier sessions will

be lost. A somewhat more subtle approach can also be taken. To accommodate file

sharing, the Coda file system (Kistler and Satyanaryanan, 1992) uses a special

allocation scheme that bears some similarities to share reservations in NFS. To

understand how the scheme works, the following is important. When a client

successfully opens a file f, an entire copy of f is transferred to the client's

machine. The server records that the client has a copy of f. So far, this

approach is similar to open delegation in NFS.

[Page 519]

Now suppose client A has

opened file f for writing. When another client B wants to open f as well, it

will fail. This failure is caused by the fact that the server has recorded that

client A might have already modified f. On the other hand, had client A opened

f for reading, an attempt by client B to get a copy from the server for reading

would succeed. An attempt by B to open for writing would succeed as well.

Now consider what happens

when several copies of f have been stored locally at various clients. Given

what we have just said, only one client will be able to modify f. If this

client modifies f and subsequently closes the file, the file will be

transferred back to the server. However, every other client may proceed to read

its local copy despite the fact that the copy is actually outdated.

The reason for this

apparently inconsistent behavior is that a session is treated as a transaction

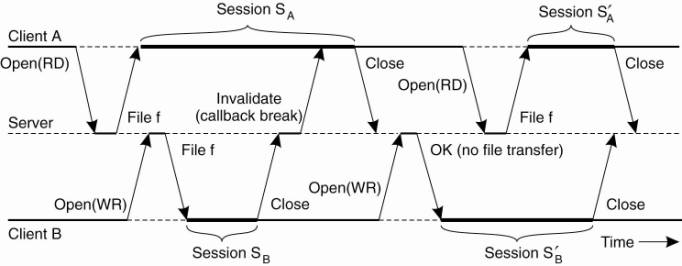

in Coda. Consider Fig. 11-20, which shows the time line for two processes, A

and B. Assume A has opened f for reading, leading to session SA. Client B has opened

f for writing, shown as session SB.

Figure 11-20. The

transactional behavior in sharing files in Coda.

When B closes session SB, it

transfers the updated version of f to the server, which will then send an

invalidation message to A. A will now know that it is reading from an older

version of f. However, from a transactional point of view, this really does not

matter because session SA could be considered to have been scheduled before

session SB.

11.6. Consistency and

Replication

Caching and replication play

an important role in distributed file systems, most notably when they are

designed to operate over wide-area networks. In what follows, we will take a

look at various aspects related to client-side caching of file data, as well as

the replication of file servers. Also, we consider the role of replication in

peer-to-peer file-sharing systems.

[Page 520]

11.6.1. Client-Side Caching

To see how client-side

caching is deployed in practice, we return to our example systems NFS and Coda.

Caching in NFS

Caching in NFSv3 has been

mainly left outside of the protocol. This approach has led to the

implementation of different caching policies, most of which never guaranteed

consistency. At best, cached data could be stale for a few seconds compared to

the data stored at a server. However, implementations also exist that allowed

cached data to be stale for 30 seconds without the client knowing. This state

of affairs is less than desirable.

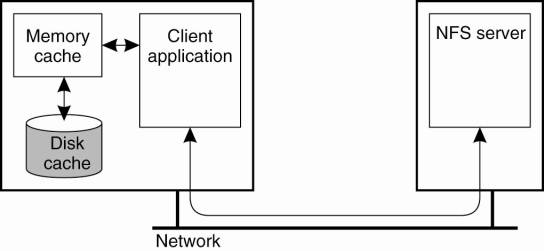

NFSv4 solves some of these

consistency problems, but essentially still leaves cache consistency to be

handled in an implementation-dependent way. The general caching model that is

assumed by NFS is shown in Fig. 11-21. Each client can have a memory cache that

contains data previously read from the server. In addition, there may also be a

disk cache that is added as an extension to the memory cache, using the same

consistency parameters.

Figure 11-21. Client-side

caching in NFS.

Typically, clients cache

file data, attributes, file handles, and directories. Different strategies

exist to handle consistency of the cached data, cached attributes, and so on.

Let us first take a look at caching file data.

NFSv4 supports two different

approaches for caching file data. The simplest approach is when a client opens

a file and caches the data it obtains from the server as the result of various

read operations. In addition, write operations can be carried out in the cache

as well. When the client closes the file, NFS requires that if modifications

have taken place, the cached data must be flushed back to the server. This

approach corresponds to implementing session semantics as discussed earlier.

[Page 521]

Once (part of) a file has

been cached, a client can keep its data in the cache even after closing the

file. Also, several clients on the same machine can share a single cache. NFS

requires that whenever a client opens a previously closed file that has been

(partly) cached, the client must immediately revalidate the cached data. Revalidation

takes place by checking when the file was last modified and invalidating the

cache in case it contains stale data.

In NFSv4 a server may

delegate some of its rights to a client when a file is opened. Open delegation

takes place when the client machine is allowed to locally handle open and close

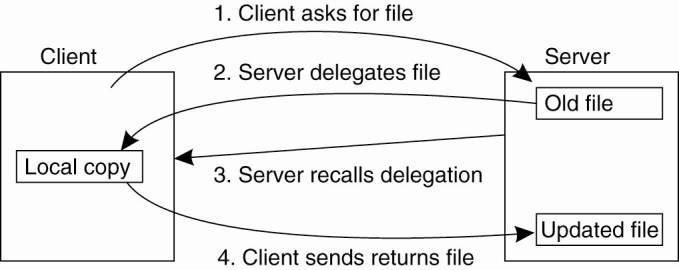

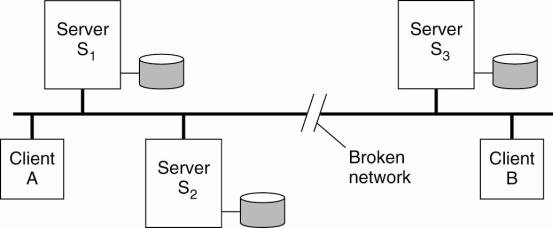

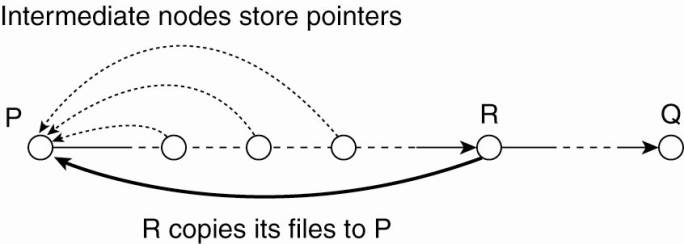

operations from other clients on the same machine. Normally, the server is in