Chapter 4. Communication

Interprocess communication

is at the heart of all distributed systems. It makes no sense to study

distributed systems without carefully examining the ways that processes on

different machines can exchange information. Communication in distributed

systems is always based on low-level message passing as offered by the

underlying network. Expressing communication through message passing is harder

than using primitives based on shared memory, as available for nondistributed

platforms. Modern distributed systems often consist of thousands or even

millions of processes scattered across a network with unreliable communication

such as the Internet. Unless the primitive communication facilities of computer

networks are replaced by something else, development of large-scale distributed

applications is extremely difficult.

In this chapter, we start by

discussing the rules that communicating processes must adhere to, known as

protocols, and concentrate on structuring those protocols in the form of

layers. We then look at three widely-used models for communication: Remote

Procedure Call (RPC), Message-Oriented Middleware (MOM), and data streaming. We

also discuss the general problem of sending data to multiple receivers, called

multicasting.

Our first model for

communication in distributed systems is the remote procedure call (RPC). An RPC

aims at hiding most of the intricacies of message passing, and is ideal for

client-server applications.

In many distributed applications,

communication does not follow the rather strict pattern of client-server

interaction. In those cases, it turns out that thinking in terms of messages is

more appropriate. However, the low-level communication facilities of computer

networks are in many ways not suitable due to their lack of distribution

transparency. An alternative is to use a high-level message-queuing model, in

which communication proceeds much the same as in electronic mail systems.

Message-oriented middleware (MOM) is a subject important enough to warrant a

section of its own.

[Page 116]

With the advent of

multimedia distributed systems, it became apparent that many systems were

lacking support for communication of continuous media, such as audio and video.

What is needed is the notion of a stream that can support the continuous flow

of messages, subject to various timing constraints. Streams are discussed in a

separate section.

Finally, since our

understanding of setting up multicast facilities has improved, novel and elegant

solutions for data dissemination have emerged. We pay separate attention to

this subject in the last section of this chapter.

4.1. Fundamentals

Before we start our

discussion on communication in distributed systems, we first recapitulate some

of the fundamental issues related to communication. In the next section we

briefly discuss network communication protocols, as these form the basis for

any distributed system. After that, we take a different approach by classifying

the different types of communication that occurs in distributed systems.

4.1.1. Layered Protocols

Due to the absence of shared

memory, all communication in distributed systems is based on sending and

receiving (low level) messages. When process A wants to communicate with

process B, it first builds a message in its own address space. Then it executes

a system call that causes the operating system to send the message over the

network to B. Although this basic idea sounds simple enough, in order to

prevent chaos, A and B have to agree on the meaning of the bits being sent. If

A sends a brilliant new novel written in French and encoded in IBM's EBCDIC

character code, and B expects the inventory of a supermarket written in English

and encoded in ASCII, communication will be less than optimal.

Many different agreements

are needed. How many volts should be used to signal a 0-bit, and how many volts

for a 1-bit? How does the receiver know which is the last bit of the message?

How can it detect if a message has been damaged or lost, and what should it do

if it finds out? How long are numbers, strings, and other data items, and how

are they represented? In short, agreements are needed at a variety of levels,

varying from the low-level details of bit transmission to the high-level

details of how information is to be expressed.

[Page 117]

To make it easier to deal

with the numerous levels and issues involved in communication, the

International Standards Organization (ISO) developed a reference model that

clearly identifies the various levels involved, gives them standard names, and

points out which level should do which job. This model is called the Open

Systems Interconnection Reference Model (Day and Zimmerman, 1983), usually

abbreviated as ISO OSI or sometimes just the OSI model. It should be emphasized

that the protocols that were developed as part of the OSI model were never

widely used and are essentially dead now. However, the underlying model itself

has proved to be quite useful for understanding computer networks. Although we

do not intend to give a full description of this model and all of its

implications here, a short introduction will be helpful. For more details, see

Tanen-baum (2003).

The OSI model is designed to

allow open systems to communicate. An open system is one that is prepared to

communicate with any other open system by using standard rules that govern the

format, contents, and meaning of the messages sent and received. These rules

are formalized in what are called protocols. To allow a group of computers to

communicate over a network, they must all agree on the protocols to be used. A

distinction is made between two general types of protocols. With connection

oriented protocols, before exchanging data the sender and receiver first

explicitly establish a connection, and possibly negotiate the protocol they

will use. When they are done, they must release (terminate) the connection. The

telephone is a connection-oriented communication system. With connectionless

protocols, no setup in advance is needed. The sender just transmits the first

message when it is ready. Dropping a letter in a mailbox is an example of

connectionless communication. With computers, both connection-oriented and

connectionless communication are common.

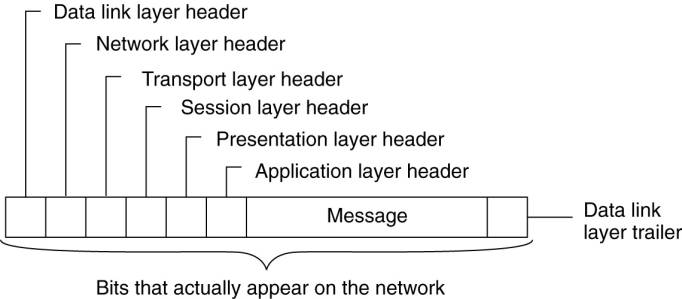

In the OSI model,

communication is divided up into seven levels or layers, as shown in Fig. 4-1.

Each layer deals with one specific aspect of the communication. In this way,

the problem can be divided up into manageable pieces, each of which can be

solved independent of the others. Each layer provides an interface to the one

above it. The interface consists of a set of operations that together define

the service the layer is prepared to offer its users.

Figure 4-1. Layers,

interfaces, and protocols in the OSI model.

(This item is displayed on

page 118 in the print version)

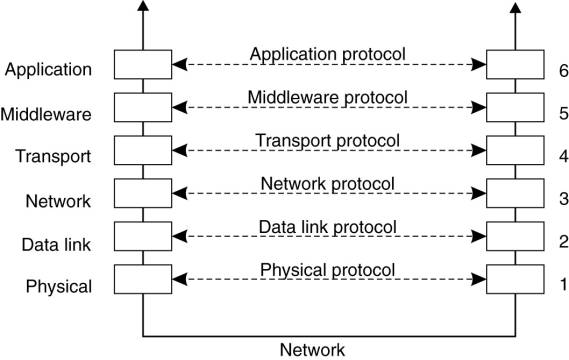

When process A on machine 1

wants to communicate with process B on machine 2, it builds a message and

passes the message to the application layer on its machine. This layer might be

a library procedure, for example, but it could also be implemented in some

other way (e.g., inside the operating system, on an external network processor,

etc.). The application layer software then adds a header to the front of the

message and passes the resulting message across the layer 6/7 interface to the

presentation layer. The presentation layer in turn adds its own header and

passes the result down to the session layer, and so on. Some layers add not

only a header to the front, but also a trailer to the end. When it hits the

bottom, the physical layer actually transmits the message (which by now might

look as shown in Fig. 4-2) by putting it onto the physical transmission medium.

[Page 118]

Figure 4-2. A typical

message as it appears on the network.

When the message arrives at

machine 2, it is passed upward, with each layer stripping off and examining its

own header. Finally, the message arrives at the receiver, process B, which may

reply to it using the reverse path. The information in the layer n header is

used for the layer n protocol.

As an example of why layered

protocols are important, consider communication between two companies, Zippy

Airlines and its caterer, Mushy Meals, Inc. Every month, the head of passenger

service at Zippy asks her secretary to contact the sales manager's secretary at

Mushy to order 100,000 boxes of rubber chicken. Traditionally, the orders went

via the post office. However, as the postal service deteriorated, at some point

the two secretaries decided to abandon it and communicate by e-mail. They could

do this without bothering their bosses, since their protocol deals with the

physical transmission of the orders, not their contents.

[Page 119]

Similarly, the head of

passenger service can decide to drop the rubber chicken and go for Mushy's new

special, prime rib of goat, without that decision affecting the secretaries.

The thing to notice is that we have two layers here, the bosses and the

secretaries. Each layer has its own protocol (subjects of discussion and technology)

that can be changed independently of the other one. It is precisely this

independence that makes layered protocols attractive. Each one can be changed

as technology improves, without the other ones being affected.

In the OSI model, there are

not two layers, but seven, as we saw in Fig. 4-1. The collection of protocols

used in a particular system is called a protocol suite or protocol stack. It is

important to distinguish a reference model from its actual protocols. As we

mentioned, the OSI protocols were never popular. In contrast, protocols

developed for the Internet, such as TCP and IP, are mostly used. In the

following sections, we will briefly examine each of the OSI layers in turn,

starting at the bottom. However, instead of giving examples of OSI protocols,

where appropriate, we will point out some of the Internet protocols used in

each layer.

Lower-Level Protocols

We start with discussing the

three lowest layers of the OSI protocol suite. Together, these layers implement

the basic functions that encompass a computer network.

The physical layer is

concerned with transmitting the 0s and 1s. How many volts to use for 0 and 1,

how many bits per second can be sent, and whether transmission can take place

in both directions simultaneously are key issues in the physical layer. In

addition, the size and shape of the network connector (plug), as well as the

number of pins and meaning of each are of concern here.

The physical layer protocol

deals with standardizing the electrical, mechanical, and signaling interfaces

so that when one machine sends a 0 bit it is actually received as a 0 bit and

not a 1 bit. Many physical layer standards have been developed (for different

media), for example, the RS-232-C standard for serial communication lines.

The physical layer just

sends bits. As long as no errors occur, all is well. However, real

communication networks are subject to errors, so some mechanism is needed to

detect and correct them. This mechanism is the main task of the data link

layer. What it does is to group the bits into units, sometimes called frames,

and see that each frame is correctly received.

The data link layer does its

work by putting a special bit pattern on the start and end of each frame to

mark them, as well as computing a checksum by adding up all the bytes in the

frame in a certain way. The data link layer appends the checksum to the frame.

When the frame arrives, the receiver recomputes the checksum from the data and

compares the result to the checksum following the frame. If the two agree, the

frame is considered correct and is accepted. It they disagree, the receiver

asks the sender to retransmit it. Frames are assigned sequence numbers (in the

header), so everyone can tell which is which.

[Page 120]

On a LAN, there is usually no

need for the sender to locate the receiver. It just puts the message out on the

network and the receiver takes it off. A wide-area network, however, consists

of a large number of machines, each with some number of lines to other

machines, rather like a large-scale map showing major cities and roads

connecting them. For a message to get from the sender to the receiver it may

have to make a number of hops, at each one choosing an outgoing line to use.

The question of how to choose the best path is called routing, and is

essentially the primary task of the network layer.

The problem is complicated

by the fact that the shortest route is not always the best route. What really

matters is the amount of delay on a given route, which, in turn, is related to

the amount of traffic and the number of messages queued up for transmission

over the various lines. The delay can thus change over the course of time. Some

routing algorithms try to adapt to changing loads, whereas others are content

to make decisions based on long-term averages.

At present, the most widely

used network protocol is the connectionless IP (Internet Protocol), which is

part of the Internet protocol suite. An IP packet (the technical term for a

message in the network layer) can be sent without any setup. Each IP packet is

routed to its destination independent of all others. No internal path is

selected and remembered.

Transport Protocols

The transport layer forms

the last part of what could be called a basic network protocol stack, in the

sense that it implements all those services that are not provided at the

interface of the network layer, but which are reasonably needed to build

network applications. In other words, the transport layer turns the underlying

network into something that an application developer can use.

Packets can be lost on the

way from the sender to the receiver. Although some applications can handle

their own error recovery, others prefer a reliable connection. The job of the

transport layer is to provide this service. The idea is that the application

layer should be able to deliver a message to the transport layer with the

expectation that it will be delivered without loss.

Upon receiving a message

from the application layer, the transport layer breaks it into pieces small

enough for transmission, assigns each one a sequence number, and then sends

them all. The discussion in the transport layer header concerns which packets

have been sent, which have been received, how many more the receiver has room

to accept, which should be retransmitted, and similar topics.

Reliable transport

connections (which by definition are connection oriented) can be built on top

of connection-oriented or connectionless network services. In the former case

all the packets will arrive in the correct sequence (if they arrive at all),

but in the latter case it is possible for one packet to take a different route

and arrive earlier than the packet sent before it. It is up to the transport

layer software to put everything back in order to maintain the illusion that a

transport connection is like a big tube—you put messages into it and they come

out undamaged and in the same order in which they went in. Providing this

end-to-end communication behavior is an important aspect of the transport

layer.

[Page 121]

The Internet transport

protocol is called TCP (Transmission Control Protocol) and is described in

detail in Comer (2006). The combination TCP/IP is now used as a de facto

standard for network communication. The Internet protocol suite also supports a

connectionless transport protocol called UDP (Universal Datagram Protocol),

which is essentially just IP with some minor additions. User programs that do

not need a connection-oriented protocol normally use UDP.

Additional transport

protocols are regularly proposed. For example, to support real-time data

transfer, the Real-time Transport Protocol (RTP) has been defined. RTP is a

framework protocol in the sense that it specifies packet formats for real-time

data without providing the actual mechanisms for guaranteeing data delivery. In

addition, it specifies a protocol for monitoring and controlling data transfer

of RTP packets (Schulzrinne et al., 2003).

Higher-Level Protocols

Above the transport layer,

OSI distinguished three additional layers. In practice, only the application

layer is ever used. In fact, in the Internet protocol suite, everything above

the transport layer is grouped together. In the face of middle-ware systems, we

shall see in this section that neither the OSI nor the Internet approach is really

appropriate.

The session layer is

essentially an enhanced version of the transport layer. It provides dialog

control, to keep track of which party is currently talking, and it provides

synchronization facilities. The latter are useful to allow users to insert

checkpoints into long transfers, so that in the event of a crash, it is

necessary to go back only to the last checkpoint, rather than all the way back

to the beginning. In practice, few applications are interested in the session

layer and it is rarely supported. It is not even present in the Internet

protocol suite. However, in the context of developing middleware solutions, the

concept of a session and its related protocols has turned out to be quite

relevant, notably when defining higher-level communication protocols.

Unlike the lower layers,

which are concerned with getting the bits from the sender to the receiver

reliably and efficiently, the presentation layer is concerned with the meaning

of the bits. Most messages do not consist of random bit strings, but more

structured information such as people's names, addresses, amounts of money, and

so on. In the presentation layer it is possible to define records containing

fields like these and then have the sender notify the receiver that a message contains

a particular record in a certain format. This makes it easier for machines with

different internal representations to communicate with each other.

[Page 122]

The OSI application layer was

originally intended to contain a collection of standard network applications

such as those for electronic mail, file transfer, and terminal emulation. By

now, it has become the container for all applications and protocols that in one

way or the other do not fit into one of the underlying layers. From the

perspective of the OSI reference model, virtually all distributed systems are

just applications.

What is missing in this

model is a clear distinction between applications, application-specific

protocols, and general-purpose protocols. For example, the Internet File

Transfer Protocol (FTP) (Postel and Reynolds, 1985; and Horowitz and Lunt,

1997) defines a protocol for transferring files between a client and server

machine. The protocol should not be confused with the ftp program, which is an

end-user application for transferring files and which also (not entirely by

coincidence) happens to implement the Internet FTP.

Another example of a typical

application-specific protocol is the HyperText Transfer Protocol (HTTP)

(Fielding et al., 1999), which is designed to remotely manage and handle the

transfer of Web pages. The protocol is implemented by applications such as Web

browsers and Web servers. However, HTTP is now also used by systems that are

not intrinsically tied to the Web. For example, Java's object-invocation

mechanism uses HTTP to request the invocation of remote objects that are

protected by a firewall (Sun Microsystems, 2004b).

There are also many

general-purpose protocols that are useful to many applications, but which

cannot be qualified as transport protocols. In many cases, such protocols fall

into the category of middleware protocols, which we discuss next.

Middleware Protocols

Middleware is an application

that logically lives (mostly) in the application layer, but which contains many

general-purpose protocols that warrant their own layers, independent of other,

more specific applications. A distinction can be made between high-level

communication protocols and protocols for establishing various middleware

services.

There are numerous protocols

to support a variety of middleware services. For example, as we discuss in

Chap. 9, there are various ways to establish authentication, that is, provide

proof of a claimed identity. Authentication protocols are not closely tied to

any specific application, but instead, can be integrated into a middleware

system as a general service. Likewise, authorization protocols by which

authenticated users and processes are granted access only to those resources for

which they have authorization, tend to have a general, application-independent

nature.

As another example, we shall

consider a number of distributed commit protocols in Chap. 8. Commit protocols

establish that in a group of processes either all processes carry out a

particular operation, or that the operation is not carried out at all. This

phenomenon is also referred to as atomicity and is widely applied in

transactions. As we shall see, besides transactions, other applications, like

fault-tolerant ones, can also take advantage of distributed commit protocols.

[Page 123]

As a last example, consider

a distributed locking protocol by which a resource can be protected against

simultaneous access by a collection of processes that are distributed across

multiple machines. We shall come across a number of such protocols in Chap. 6.

Again, this is an example of a protocol that can be used to implement a general

middleware service, but which, at the same time, is highly independent of any

specific application.

Middleware communication

protocols support high-level communication services. For example, in the next

two sections we shall discuss protocols that allow a process to call a

procedure or invoke an object on a remote machine in a highly transparent way.

Likewise, there are high-level communication services for setting and

synchronizing streams for transferring real-time data, such as needed for

multimedia applications. As a last example, some middleware systems offer

reliable multicast services that scale to thousands of receivers spread across

a wide-area network.

Some of the middleware

communication protocols could equally well belong in the transport layer, but

there may be specific reasons to keep them at a higher level. For example,

reliable multicasting services that guarantee scalability can be implemented

only if application requirements are taken into account. Consequently, a

middleware system may offer different (tunable) protocols, each in turn

implemented using different transport protocols, but offering a single

interface.

Taking this approach to

layering leads to a slightly adapted reference model for communication, as

shown in Fig. 4-3. Compared to the OSI model, the session and presentation

layer have been replaced by a single middleware layer that contains

application-independent protocols. These protocols do not belong in the lower

layers we just discussed. The original transport services may also be offered

as a middleware service, without being modified. This approach is somewhat

analogous to offering UDP at the transport level. Likewise, middleware

communication services may include message-passing services comparable to those

offered by the transport layer.

[Page 124]

Figure 4-3. An adapted

reference model for networked communication.

(This item is displayed on

page 123 in the print version)

In the remainder of this

chapter, we concentrate on four high-level middle-ware communication services:

remote procedure calls, message queuing services, support for communication of continuous

media through streams, and multicasting. Before doing so, there are other

general criteria for distinguishing (middleware) communication which we discuss

next.

4.1.2. Types of

Communication

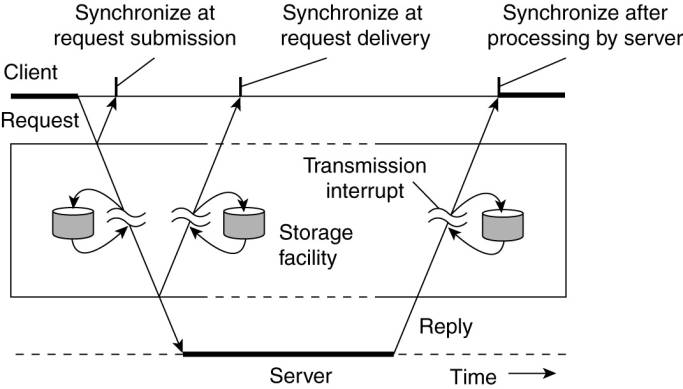

To understand the various

alternatives in communication that middleware can offer to applications, we

view the middleware as an additional service in client-server computing, as

shown in Fig. 4-4. Consider, for example an electronic mail system. In

principle, the core of the mail delivery system can be seen as a middleware

communication service. Each host runs a user agent allowing users to compose,

send, and receive e-mail. A sending user agent passes such mail to the mail

delivery system, expecting it, in turn, to eventually deliver the mail to the

intended recipient. Likewise, the user agent at the receiver's side connects to

the mail delivery system to see whether any mail has come in. If so, the

messages are transferred to the user agent so that they can be displayed and

read by the user.

Figure 4-4. Viewing

middleware as an intermediate (distributed) service in application-level

communication.

An electronic mail system is

a typical example in which communication is persistent. With persistent

communication, a message that has been submitted for transmission is stored by

the communication middleware as long as it takes to deliver it to the receiver.

In this case, the middleware will store the message at one or several of the

storage facilities shown in Fig. 4-4. As a consequence, it is not necessary for

the sending application to continue execution after submitting the message.

Likewise, the receiving application need not be executing when the message is

submitted.

[Page 125]

In contrast, with transient

communication, a message is stored by the communication system only as long as

the sending and receiving application are executing. More precisely, in terms

of Fig. 4-4, the middleware cannot deliver a message due to a transmission

interrupt, or because the recipient is currently not active, it will simply be

discarded. Typically, all transport-level communication services offer only

transient communication. In this case, the communication system consists

traditional store-and-forward routers. If a router cannot deliver a message to

the next one or the destination host, it will simply drop the message.

Besides being persistent or

transient, communication can also be asynchronous or synchronous. The

characteristic feature of asynchronous communication is that a sender continues

immediately after it has submitted its message for transmission. This means

that the message is (temporarily) stored immediately by the middleware upon

submission. With synchronous communication, the sender is blocked until its

request is known to be accepted. There are essentially three points where

synchronization can take place. First, the sender may be blocked until the

middleware notifies that it will take over transmission of the request. Second,

the sender may synchronize until its request has been delivered to the intended

recipient. Third, synchronization may take place by letting the sender wait

until its request has been fully processed, that is, up the time that the

recipient returns a response.

Various combinations of

persistence and synchronization occur in practice. Popular ones are persistence

in combination with synchronization at request submission, which is a common

scheme for many message-queuing systems, which we discuss later in this

chapter. Likewise, transient communication with synchronization after the request

has been fully processed is also widely used. This scheme corresponds with

remote procedure calls, which we also discuss below.

Besides persistence and

synchronization, we should also make a distinction between discrete and

streaming communication. The examples so far all fall in the category of

discrete communication: the parties communicate by messages, each message

forming a complete unit of information. In contrast, streaming involves sending

multiple messages, one after the other, where the messages are related to each

other by the order they are sent, or because there is a temporal relationship.

We return to streaming communication extensively below.

4.2. Remote Procedure

Call

Many distributed systems have

been based on explicit message exchange between processes. However, the

procedures send and receive do not conceal communication at all, which is

important to achieve access transparency in distributed systems. This problem

has long been known, but little was done about it until a paper by Birrell and

Nelson (1984) introduced a completely different way of handling communication.

Although the idea is refreshingly simple (once someone has thought of it), the

implications are often subtle. In this section we will examine the concept, its

implementation, its strengths, and its weaknesses.

[Page 126]

In a nutshell, what Birrell

and Nelson suggested was allowing programs to call procedures located on other

machines. When a process on machine A calls a procedure on machine B, the

calling process on A is suspended, and execution of the called procedure takes

place on B. Information can be transported from the caller to the callee in the

parameters and can come back in the procedure result. No message passing at all

is visible to the programmer. This method is known as Remote Procedure Call, or

often just RPC.

While the basic idea sounds

simple and elegant, subtle problems exist. To start with, because the calling

and called procedures run on different machines, they execute in different

address spaces, which causes complications. Parameters and results also have to

be passed, which can be complicated, especially if the machines are not

identical. Finally, either or both machines can crash and each of the possible

failures causes different problems. Still, most of these can be dealt with, and

RPC is a widely-used technique that underlies many distributed systems.

4.2.1. Basic RPC

Operation

We first start with

discussing conventional procedure calls, and then explain how the call itself

can be split into a client and server part that are each executed on different

machines.

Conventional Procedure Call

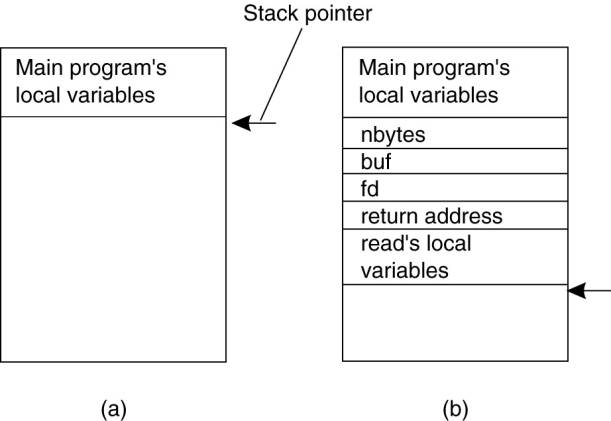

To understand how RPC works,

it is important first to fully understand how a conventional (i.e., single

machine) procedure call works. Consider a call in C like

count = read(fd, buf,

nbytes);

where fd is an integer

indicating a file, buf is an array of characters into which data are read, and

nbytes is another integer telling how many bytes to read. If the call is made

from the main program, the stack will be as shown in Fig. 4-5(a) before the

call. To make the call, the caller pushes the parameters onto the stack in

order, last one first, as shown in Fig. 4-5(b). (The reason that C compilers

push the parameters in reverse order has to do with printf—by doing so, printf

can always locate its first parameter, the format string.) After the read

procedure has finished running, it puts the return value in a register, removes

the return address, and transfers control back to the caller. The caller then

removes the parameters from the stack, returning the stack to the original

state it had before the call.

[Page 127]

Figure 4-5. (a) Parameter

passing in a local procedure call: the stack before the call to read. (b) The

stack while the called procedure is active.

Several things are worth

noting. For one, in C, parameters can be call-by-value or call-by-reference. A

value parameter, such as fd or nbytes, is simply copied to the stack as shown

in Fig. 4-5(b). To the called procedure, a value parameter is just an

initialized local variable. The called procedure may modify it, but such

changes do not affect the original value at the calling side.

A reference parameter in C is

a pointer to a variable (i.e., the address of the variable), rather than the

value of the variable. In the call to read, the second parameter is a reference

parameter because arrays are always passed by reference in C. What is actually

pushed onto the stack is the address of the character array. If the called

procedure uses this parameter to store something into the character array, it

does modify the array in the calling procedure. The difference between

call-by-value and call-by-reference is quite important for RPC, as we shall

see.

One other parameter passing

mechanism also exists, although it is not used in C. It is called

call-by-copy/restore. It consists of having the variable copied to the stack by

the caller, as in call-by-value, and then copied back after the call,

overwriting the caller's original value. Under most conditions, this achieves

exactly the same effect as call-by-reference, but in some situations, such as

the same parameter being present multiple times in the parameter list, the semantics

are different. The call-by-copy/restore mechanism is not used in many

languages.

The decision of which

parameter passing mechanism to use is normally made by the language designers

and is a fixed property of the language. Sometimes it depends on the data type

being passed. In C, for example, integers and other scalar types are always

passed by value, whereas arrays are always passed by reference, as we have

seen. Some Ada compilers use copy/restore for in out parameters, but others use

call-by-reference. The language definition permits either choice, which makes

the semantics a bit fuzzy.

[Page 128]

Client and Server Stubs

The idea behind RPC is to

make a remote procedure call look as much as possible like a local one. In

other words, we want RPC to be transparent—the calling procedure should not be

aware that the called procedure is executing on a different machine or vice

versa. Suppose that a program needs to read some data from a file. The

programmer puts a call to read in the code to get the data. In a traditional

(single-processor) system, the read routine is extracted from the library by

the linker and inserted into the object program. It is a short procedure, which

is generally implemented by calling an equivalent read system call. In other words,

the read procedure is a kind of interface between the user code and the local

operating system.

Even though read does a

system call, it is called in the usual way, by pushing the parameters onto the

stack, as shown in Fig. 4-5(b). Thus the programmer does not know that read is

actually doing something fishy.

RPC achieves its

transparency in an analogous way. When read is actually a remote procedure

(e.g., one that will run on the file server's machine), a different version of

read, called a client stub, is put into the library. Like the original one, it,

too, is called using the calling sequence of Fig. 4-5(b). Also like the

original one, it too, does a call to the local operating system. Only unlike

the original one, it does not ask the operating system to give it data.

Instead, it packs the parameters into a message and requests that message to be

sent to the server as illustrated in Fig. 4-6. Following the call to send, the

client stub calls receive, blocking itself until the reply comes back.

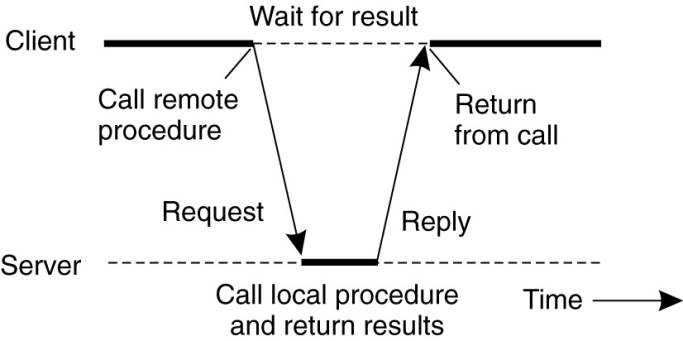

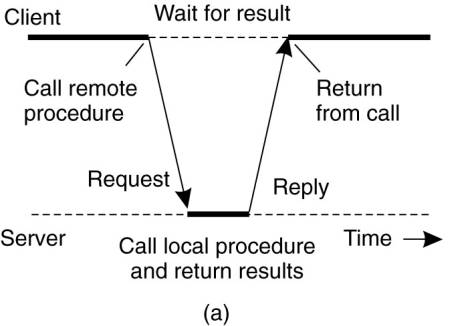

Figure 4-6. Principle of RPC

between a client and server program.

When the message arrives at

the server, the server's operating system passes it up to a server stub. A

server stub is the server-side equivalent of a client stub: it is a piece of

code that transforms requests coming in over the network into local procedure

calls. Typically the server stub will have called receive and be blocked

waiting for incoming messages. The server stub unpacks the parameters from the

message and then calls the server procedure in the usual way (i.e., as in Fig.

4-5). From the server's point of view, it is as though it is being called

directly by the client—the parameters and return address are all on the stack

where they belong and nothing seems unusual. The server performs its work and

then returns the result to the caller in the usual way. For example, in the

case of read, the server will fill the buffer, pointed to by the second

parameter, with the data. This buffer will be internal to the server stub.

[Page 129]

When the server stub gets

control back after the call has completed, it packs the result (the buffer) in

a message and calls send to return it to the client. After that, the server

stub usually does a call to receive again, to wait for the next incoming request.

When the message gets back

to the client machine, the client's operating system sees that it is addressed

to the client process (or actually the client stub, but the operating system

cannot see the difference). The message is copied to the waiting buffer and the

client process unblocked. The client stub inspects the message, unpacks the

result, copies it to its caller, and returns in the usual way. When the caller

gets control following the call to read, all it knows is that its data are

available. It has no idea that the work was done remotely instead of by the

local operating system.

This blissful ignorance on

the part of the client is the beauty of the whole scheme. As far as it is

concerned, remote services are accessed by making ordinary (i.e., local)

procedure calls, not by calling send and receive. All the details of the

message passing are hidden away in the two library procedures, just as the

details of actually making system calls are hidden away in traditional

libraries.

To summarize, a remote

procedure call occurs in the following steps:

1. The client procedure calls the client

stub in the normal way.

2. The client stub builds a message and

calls the local operating system.

3. The client's OS sends the message to the

remote OS.

4. The remote OS gives the message to the

server stub.

5. The server stub unpacks the parameters

and calls the server.

6. The server does the work and returns the

result to the stub.

7. The server stub packs it in a message

and calls its local OS.

8. The server's OS sends the message to the

client's OS.

9. The client's OS gives the message to the

client stub.

10.

The stub unpacks the result and

returns to the client.

The net effect of all these

steps is to convert the local call by the client procedure to the client stub,

to a local call to the server procedure without either client or server being

aware of the intermediate steps or the existence of the network.

[Page 130]

4.2.2. Parameter Passing

The function of the client

stub is to take its parameters, pack them into a message, and send them to the

server stub. While this sounds straightforward, it is not quite as simple as it

at first appears. In this section we will look at some of the issues concerned

with parameter passing in RPC systems.

Passing Value Parameters

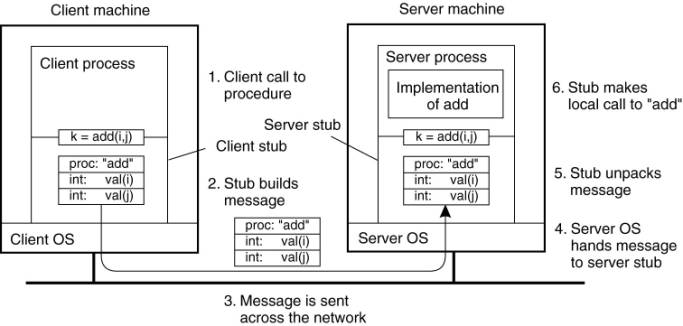

Packing parameters into a

message is called parameter marshaling. As a very simple example, consider a

remote procedure, add(i, j), that takes two integer parameters i and j and returns

their arithmetic sum as a result. (As a practical matter, one would not

normally make such a simple procedure remote due to the overhead, but as an

example it will do.) The call to add, is shown in the left-hand portion (in the

client process) in Fig. 4-7. The client stub takes its two parameters and puts

them in a message as indicated. It also puts the name or number of the

procedure to be called in the message because the server might support several

different calls, and it has to be told which one is required.

Figure 4-7. The steps

involved in a doing a remote computation through RPC.

When the message arrives at

the server, the stub examines the message to see which procedure is needed and

then makes the appropriate call. If the server also supports other remote

procedures, the server stub might have a switch statement in it to select the

procedure to be called, depending on the first field of the message. The actual

call from the stub to the server looks like the original client call, except

that the parameters are variables initialized from the incoming message.

When the server has

finished, the server stub gains control again. It takes the result sent back by

the server and packs it into a message. This message is sent back back to the client

stub, which unpacks it to extract the result and returns the value to the

waiting client procedure.

[Page 131]

As long as the client and

server machines are identical and all the parameters and results are scalar

types, such as integers, characters, and Booleans, this model works fine.

However, in a large distributed system, it is common that multiple machine

types are present. Each machine often has its own representation for numbers,

characters, and other data items. For example, IBM mainframes use the EBCDIC

character code, whereas IBM personal computers use ASCII. As a consequence, it

is not possible to pass a character parameter from an IBM PC client to an IBM

mainframe server using the simple scheme of Fig. 4-7: the server will interpret

the character incorrectly.

Similar problems can occur

with the representation of integers (one's complement versus two's complement)

and floating-point numbers. In addition, an even more annoying problem exists

because some machines, such as the Intel Pentium, number their bytes from right

to left, whereas others, such as the Sun SPARC, number them the other way. The

Intel format is called little endian and the SPARC format is called big endian,

after the politicians in Gulliver's Travels who went to war over which end of

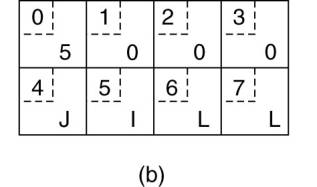

an egg to break (Cohen, 1981). As an example, consider a procedure with two

parameters, an integer and a four-character string. Each parameter requires one

32-bit word. Fig. 4-8(a) shows what the parameter portion of a message built by

a client stub on an Intel Pentium might look like. The first word contains the

integer parameter, 5 in this case, and the second contains the string

"JILL."

Figure 4-8. (a) The original

message on the Pentium. (b) The message after receipt on the SPARC. (c) The message

after being inverted. The little numbers in boxes indicate the address of each

byte.

Since messages are

transferred byte for byte (actually, bit for bit) over the network, the first

byte sent is the first byte to arrive. In Fig. 4-8(b) we show what the message

of Fig. 4-8(a) would look like if received by a SPARC, which numbers its bytes

with byte 0 at the left (high-order byte) instead of at the right (low-order

byte) as do all the Intel chips. When the server stub reads the parameters at addresses

0 and 4, respectively, it will find an integer equal to 83,886,080 (5 x 224)

and a string "JILL".

One obvious, but

unfortunately incorrect, approach is to simply invert the bytes of each word

after they are received, leading to Fig. 4-8(c). Now the integer is 5 and the

string is "LLIJ". The problem here is that integers are reversed by

the different byte ordering, but strings are not. Without additional

information about what is a string and what is an integer, there is no way to

repair the damage.

[Page 132]

Passing Reference Parameters

We now come to a difficult

problem: How are pointers, or in general, references passed? The answer is:

only with the greatest of difficulty, if at all. Remember that a pointer is meaningful

only within the address space of the process in which it is being used. Getting

back to our read example discussed earlier, if the second parameter (the

address of the buffer) happens to be 1000 on the client, one cannot just pass

the number 1000 to the server and expect it to work. Address 1000 on the server

might be in the middle of the program text.

One solution is just to

forbid pointers and reference parameters in general. However, these are so

important that this solution is highly undesirable. In fact, it is not

necessary either. In the read example, the client stub knows that the second

parameter points to an array of characters. Suppose, for the moment, that it

also knows how big the array is. One strategy then becomes apparent: copy the

array into the message and send it to the server. The server stub can then call

the server with a pointer to this array, even though this pointer has a

different numerical value than the second parameter of read has. Changes the

server makes using the pointer (e.g., storing data into it) directly affect the

message buffer inside the server stub. When the server finishes, the original

message can be sent back to the client stub, which then copies it back to the

client. In effect, call-by-reference has been replaced by copy/restore.

Although this is not always identical, it frequently is good enough.

One optimization makes this

mechanism twice as efficient. If the stubs know whether the buffer is an input

parameter or an output parameter to the server, one of the copies can be

eliminated. If the array is input to the server (e.g., in a call to write) it

need not be copied back. If it is output, it need not be sent over in the first

place.

As a final comment, it is

worth noting that although we can now handle pointers to simple arrays and

structures, we still cannot handle the most general case of a pointer to an

arbitrary data structure such as a complex graph. Some systems attempt to deal

with this case by actually passing the pointer to the server stub and generating

special code in the server procedure for using pointers. For example, a request

may be sent back to the client to provide the referenced data.

Parameter Specification and Stub Generation

From what we have explained

so far, it is clear that hiding a remote procedure call requires that the

caller and the callee agree on the format of the messages they exchange, and

that they follow the same steps when it comes to, for example, passing complex

data structures. In other words, both sides in an RPC should follow the same

protocol or the RPC will not work correctly.

[Page 133]

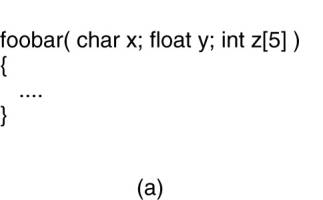

As a simple example,

consider the procedure of Fig. 4-9(a). It has three parameters, a character, a

floating-point number, and an array of five integers. Assuming a word is four

bytes, the RPC protocol might prescribe that we should transmit a character in

the rightmost byte of a word (leaving the next 3 bytes empty), a float as a

whole word, and an array as a group of words equal to the array length,

preceded by a word giving the length, as shown in Fig. 4-9(b). Thus given these

rules, the client stub for foobar knows that it must use the format of Fig.

4-9(b), and the server stub knows that incoming messages for foobar will have

the format of Fig. 4-9(b).

Figure 4-9. (a) A procedure.

(b) The corresponding message.

Defining the message format

is one aspect of an RPC protocol, but it is not sufficient. What we also need

is the client and the server to agree on the representation of simple data structures,

such as integers, characters, Booleans, etc. For example, the protocol could

prescribe that integers are represented in two's complement, characters in

16-bit Unicode, and floats in the IEEE standard #754 format, with everything

stored in little endian. With this additional information, messages can be

unambiguously interpreted.

With the encoding rules now

pinned down to the last bit, the only thing that remains to be done is that the

caller and callee agree on the actual exchange of messages. For example, it may

be decided to use a connection-oriented transport service such as TCP/IP. An

alternative is to use an unreliable datagram service and let the client and

server implement an error control scheme as part of the RPC protocol. In

practice, several variants exist.

Once the RPC protocol has

been fully defined, the client and server stubs need to be implemented.

Fortunately, stubs for the same protocol but different procedures normally

differ only in their interface to the applications. An interface consists of a

collection of procedures that can be called by a client, and which are

implemented by a server. An interface is usually available in the same

programing language as the one in which the client or server is written

(although this is strictly speaking, not necessary). To simplify matters,

interfaces are often specified by means of an Interface Definition Language

(IDL). An interface specified in such an IDL is then subsequently compiled into

a client stub and a server stub, along with the appropriate compile-time or

run-time interfaces.

[Page 134]

Practice shows that using an

interface definition language considerably simplifies client-server

applications based on RPCs. Because it is easy to fully generate client and server

stubs, all RPC-based middleware systems offer an IDL to support application

development. In some cases, using the IDL is even mandatory, as we shall see in

later chapters.

4.2.3. Asynchronous RPC

As in conventional procedure

calls, when a client calls a remote procedure, the client will block until a

reply is returned. This strict request-reply behavior is unnecessary when there

is no result to return, and only leads to blocking the client while it could

have proceeded and have done useful work just after requesting the remote

procedure to be called. Examples of where there is often no need to wait for a

reply include: transferring money from one account to another, adding entries

into a database, starting remote services, batch processing, and so on.

To support such situations,

RPC systems may provide facilities for what are called asynchronous RPCs, by

which a client immediately continues after issuing the RPC request. With

asynchronous RPCs, the server immediately sends a reply back to the client the

moment the RPC request is received, after which it calls the requested

procedure. The reply acts as an acknowledgment to the client that the server is

going to process the RPC. The client will continue without further blocking as

soon as it has received the server's acknowledgment. Fig. 4-10(b) shows how

client and server interact in the case of asynchronous RPCs. For comparison,

Fig. 4-10(a) shows the normal request-reply behavior.

Figure 4-10. (a) The

interaction between client and server in a traditional RPC. (b) The interaction

using asynchronous RPC.

(This item is displayed on

page 135 in the print version)

Asynchronous RPCs can also

be useful when a reply will be returned but the client is not prepared to wait

for it and do nothing in the meantime. For example, a client may want to

prefetch the network addresses of a set of hosts that it expects to contact

soon. While a naming service is collecting those addresses, the client may want

to do other things. In such cases, it makes sense to organize the communication

between the client and server through two asynchronous RPCs, as shown in Fig.

4-11. The client first calls the server to hand over a list of host names that

should be looked up, and continues when the server has acknowledged the receipt

of that list. The second call is done by the server, who calls the client to

hand over the addresses it found. Combining two asynchronous RPCs is sometimes

also referred to as a deferred synchronous RPC.

Figure 4-11. A client and

server interacting through two asynchronous RPCs.

(This item is displayed on

page 135 in the print version)

It should be noted that

variants of asynchronous RPCs exist in which the client continues executing

immediately after sending the request to the server. In other words, the client

does not wait for an acknowledgment of the server's acceptance of the request.

We refer to such RPCs as one-way RPCs. The problem with this approach is that

when reliability is not guaranteed, the client cannot know for sure whether or not

its request will be processed. We return to these matters in Chap. 8. Likewise,

in the case of deferred synchronous RPC, the client may poll the server to see

whether the results are available yet instead of letting the server calling

back the client.

[Page 135]

4.2.4. Example: DCE RPC

Remote procedure calls have

been widely adopted as the basis of middleware and distributed systems in

general. In this section, we take a closer look at one specific RPC system: the

Distributed Computing Environment (DCE), which was developed by the Open

Software Foundation (OSF), now called The Open Group. DCE RPC is not as popular

as some other RPC systems, notably Sun RPC. However, DCE RPC is nevertheless

representative of other RPC systems, and its specifications have been adopted

in Microsoft's base system for distributed computing, DCOM (Eddon and Eddon,

1998). We start with a brief introduction to DCE, after which we consider the

principal workings of DCE RPC. Detailed technical information on how to develop

RPC-based applications can be found in Stevens (1999).

[Page 136]

Introduction to DCE

DCE is a true middleware

system in that it is designed to execute as a layer of abstraction between

existing (network) operating systems and distributed applications. Initially

designed for UNIX, it has now been ported to all major operating systems

including VMS and Windows variants, as well as desktop operating systems. The

idea is that the customer can take a collection of existing machines, add the

DCE software, and then be able to run distributed applications, all without

disturbing existing (nondistributed) applications. Although most of the DCE

package runs in user space, in some configurations a piece (part of the

distributed file system) must be added to the kernel. The Open Group itself

only sells source code, which vendors integrate into their systems.

The programming model

underlying all of DCE is the client-server model, which was extensively

discussed in the previous chapter. User processes act as clients to access remote

services provided by server processes. Some of these services are part of DCE

itself, but others belong to the applications and are written by the

applications programmers. All communication between clients and servers takes

place by means of RPCs.

There are a number of

services that form part of DCE itself. The distributed file service is a

worldwide file system that provides a transparent way of accessing any file in

the system in the same way. It can either be built on top of the hosts' native

file systems or used instead of them. The directory service is used to keep

track of the location of all resources in the system. These resources include

machines, printers, servers, data, and much more, and they may be distributed

geographically over the entire world. The directory service allows a process to

ask for a resource and not have to be concerned about where it is, unless the

process cares. The security service allows resources of all kinds to be

protected, so access can be restricted to authorized persons. Finally, the

distributed time service is a service that attempts to keep clocks on the

different machines globally synchronized. As we shall see in later chapters,

having some notion of global time makes it much easier to ensure consistency in

a distributed system.

Goals of DCE RPC

The goals of the DCE RPC

system are relatively traditional. First and foremost, the RPC system makes it

possible for a client to access a remote service by simply calling a local

procedure. This interface makes it possible for client (i.e., application)

programs to be written in a simple way, familiar to most programmers. It also

makes it easy to have large volumes of existing code run in a distributed

environment with few, if any, changes.

[Page 137]

It is up to the RPC system

to hide all the details from the clients, and, to some extent, from the servers

as well. To start with, the RPC system can automatically locate the correct

server, and subsequently set up the communication between client and server

software (generally called binding). It can also handle the message transport

in both directions, fragmenting and reassembling them as needed (e.g., if one

of the parameters is a large array). Finally, the RPC system can automatically

handle data type conversions between the client and the server, even if they

run on different architectures and have a different byte ordering.

As a consequence of the RPC

system's ability to hide the details, clients and servers are highly

independent of one another. A client can be written in Java and a server in C,

or vice versa. A client and server can run on different hardware platforms and

use different operating systems. A variety of network protocols and data

representations are also supported, all without any intervention from the client

or server.

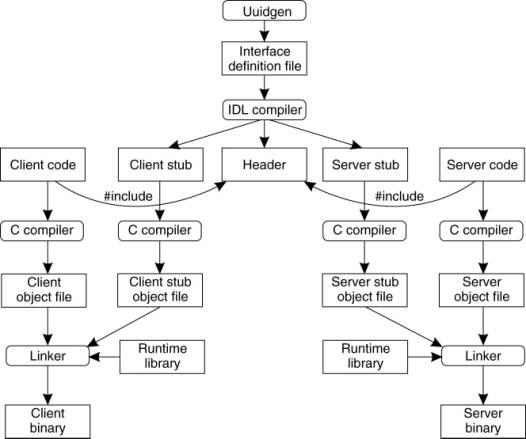

Writing a Client and a Server

The DCE RPC system consists

of a number of components, including languages, libraries, daemons, and utility

programs, among others. Together these make it possible to write clients and

servers. In this section we will describe the pieces and how they fit together.

The entire process of writing and using an RPC client and server is summarized

in Fig. 4-12.

In a client-server system,

the glue that holds everything together is the interface definition, as specified

in the Interface Definition Language, or IDL. It permits procedure declarations

in a form closely resembling function prototypes in ANSI C. IDL files can also

contain type definitions, constant declarations, and other information needed

to correctly marshal parameters and unmarshal results. Ideally, the interface

definition should also contain a formal definition of what the procedures do,

but such a definition is beyond the current state of the art, so the interface

definition just defines the syntax of the calls, not their semantics. At best

the writer can add a few comments describing what the procedures do.

A crucial element in every

IDL file is a globally unique identifier for the specified interface. The

client sends this identifier in the first RPC message and the server verifies

that it is correct. In this way, if a client inadvertently tries to bind to the

wrong server, or even to an older version of the right server, the server will

detect the error and the binding will not take place.

Interface definitions and

unique identifiers are closely related in DCE. As illustrated in Fig. 4-12, the

first step in writing a client/server application is usually calling the

uuidgen program, asking it to generate a prototype IDL file containing an

interface identifier guaranteed never to be used again in any interface

generated anywhere by uuidgen. Uniqueness is ensured by encoding in it the

location and time of creation. It consists of a 128-bit binary number

represented in the IDL file as an ASCII string in hexadecimal.

[Page 138]

Figure 4-12. The steps in

writing a client and a server in DCE RPC.

The next step is editing the

IDL file, filling in the names of the remote procedures and their parameters. It

is worth noting that RPC is not totally transparent—for example, the client and

server cannot share global variables—but the IDL rules make it impossible to

express constructs that are not supported.

When the IDL file is

complete, the IDL compiler is called to process it. The output of the IDL

compiler consists of three files:

1. A header file (e.g., interface.h, in C terms).

2. The client stub.

3. The server stub.

The header file contains the

unique identifier, type definitions, constant definitions, and function

prototypes. It should be included (using #include) in both the client and

server code. The client stub contains the actual procedures that the client

program will call. These procedures are the ones responsible for collecting and

packing the parameters into the outgoing message and then calling the runtime

system to send it. The client stub also handles unpacking the reply and

returning values to the client. The server stub contains the procedures called

by the runtime system on the server machine when an incoming message arrives.

These, in turn, call the actual server procedures that do the work.

[Page 139]

The next step is for the

application writer to write the client and server code. Both of these are then

compiled, as are the two stub procedures. The resulting client code and client

stub object files are then linked with the runtime library to produce the

executable binary for the client. Similarly, the server code and server stub

are compiled and linked to produce the server's binary. At runtime, the client

and server are started so that the application is actually executed as well.

Binding a Client to a Server

To allow a client to call a

server, it is necessary that the server be registered and prepared to accept

incoming calls. Registration of a server makes it possible for a client to

locate the server and bind to it. Server location is done in two steps:

1. Locate the server's machine.

2. Locate the server (i.e., the correct

process) on that machine.

The second step is somewhat

subtle. Basically, what it comes down to is that to communicate with a server,

the client needs to know an end point, on the server's machine to which it can

send messages. An end point (also commonly known as a port) is used by the

server's operating system to distinguish incoming messages for different

processes. In DCE, a table of (server, end point) pairs is maintained on each

server machine by a process called the DCE daemon. Before it becomes available

for incoming requests, the server must ask the operating system for an end

point. It then registers this end point with the DCE daemon. The DCE daemon

records this information (including which protocols the server speaks) in the

end point table for future use.

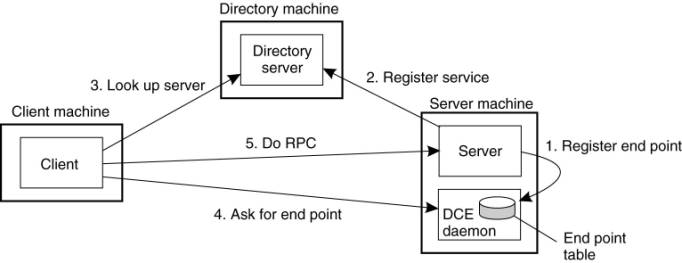

The server also registers

with the directory service by providing it the network address of the server's

machine and a name under which the server can be looked up. Binding a client to

a server then proceeds as shown in Fig. 4-13.

Figure 4-13.

Client-to-server binding in DCE.

(This item is displayed on page

140 in the print version)

Let us assume that the

client wants to bind to a video server that is locally known under the name

/local/multimedia/video/movies. It passes this name to the directory server,

which returns the network address of the machine running the video server. The

client then goes to the DCE daemon on that machine (which has a well-known end

point), and asks it to look up the end point of the video server in its end

point table. Armed with this information, the RPC can now take place. On

subsequent RPCs this lookup is not needed. DCE also gives clients the ability

to do more sophisticated searches for a suitable server when that is needed.

Secure RPC is also an option where confidentiality or data integrity is

crucial.

[Page 140]

Performing an RPC

The actual RPC is carried

out transparently and in the usual way. The client stub marshals the parameters

to the runtime library for transmission using the protocol chosen at binding

time. When a message arrives at the server side, it is routed to the correct

server based on the end point contained in the incoming message. The runtime

library passes the message to the server stub, which unmarshals the parameters

and calls the server. The reply goes back by the reverse route.

DCE provides several

semantic options. The default is at-most-once operation, in which case no call

is ever carried out more than once, even in the face of system crashes. In

practice, what this means is that if a server crashes during an RPC and then

recovers quickly, the client does not repeat the operation, for fear that it

might already have been carried out once.

Alternatively, it is

possible to mark a remote procedure as idempotent (in the IDL file), in which

case it can be repeated multiple times without harm. For example, reading a

specified block from a file can be tried over and over until it succeeds. When

an idempotent RPC fails due to a server crash, the client can wait until the

server reboots and then try again. Other semantics are also available (but rarely

used), including broadcasting the RPC to all the machines on the local network.

We return to RPC semantics in Chap. 8, when discussing RPC in the presence of

failures.

4.3. Message-Oriented Communication

Remote procedure calls and

remote object invocations contribute to hiding communication in distributed

systems, that is, they enhance access transparency. Unfortunately, neither

mechanism is always appropriate. In particular, when it cannot be assumed that

the receiving side is executing at the time a request is issued, alternative

communication services are needed. Likewise, the inherent synchronous nature of

RPCs, by which a client is blocked until its request has been processed,

sometimes needs to be replaced by something else.

[Page 141]

That something else is

messaging. In this section we concentrate on message-oriented communication in

distributed systems by first taking a closer look at what exactly synchronous

behavior is and what its implications are. Then, we discuss messaging systems

that assume that parties are executing at the time of communication. Finally,

we will examine message-queuing systems that allow processes to exchange

information, even if the other party is not executing at the time communication

is initiated.

4.3.1. Message-Oriented Transient Communication

Many distributed systems and

applications are built directly on top of the simple message-oriented model

offered by the transport layer. To better understand and appreciate the

message-oriented systems as part of middleware solutions, we first discuss

messaging through transport-level sockets.

Berkeley Sockets

Special attention has been

paid to standardizing the interface of the transport layer to allow programmers

to make use of its entire suite of (messaging) protocols through a simple set

of primitives. Also, standard interfaces make it easier to port an application

to a different machine.

As an example, we briefly

discuss the sockets interface as introduced in the 1970s in Berkeley UNIX.

Another important interface is XTI, which stands for the X/Open Transport

Interface, formerly called the Transport Layer Interface (TLI), and developed

by AT&T. Sockets and XTI are very similar in their model of network programming,

but differ in their set of primitives.

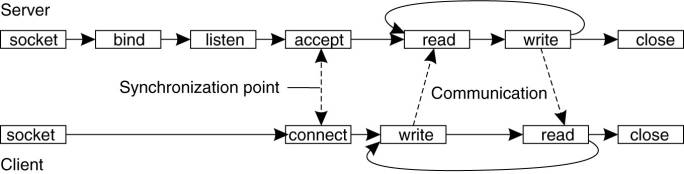

Conceptually, a socket is a

communication end point to which an application can write data that are to be

sent out over the underlying network, and from which incoming data can be read.

A socket forms an abstraction over the actual communication end point that is

used by the local operating system for a specific transport protocol. In the

following text, we concentrate on the socket primitives for TCP, which are

shown in Fig. 4-14.

Figure 4-14. The socket

primitives for TCP/IP.

(This item is displayed on

page 142 in the print version)

|

Primitive |

Meaning |

|

Socket |

Create a new communication

end point |

|

Bind |

Attach a local address to

a socket |

|

Listen |

Announce willingness to

accept connections |

|

Accept |

Block caller until a

connection request arrives |

|

Connect |

Actively attempt to

establish a connection |

|

Send |

Send some data over the

connection |

|

Receive |

Receive some data over the

connection |

|

Close |

Release the connection |

Servers generally execute

the first four primitives, normally in the order given. When calling the socket

primitive, the caller creates a new communication end point for a specific

transport protocol. Internally, creating a communication end point means that

the local operating system reserves resources to accommodate sending and

receiving messages for the specified protocol.

The bind primitive

associates a local address with the newly-created socket. For example, a server

should bind the IP address of its machine together with a (possibly well-known)

port number to a socket. Binding tells the operating system that the server

wants to receive messages only on the specified address and port.

[Page 142]

The listen primitive is called

only in the case of connection-oriented communication. It is a nonblocking call

that allows the local operating system to reserve enough buffers for a

specified maximum number of connections that the caller is willing to accept.

A call to accept blocks the

caller until a connection request arrives. When a request arrives, the local

operating system creates a new socket with the same properties as the original

one, and returns it to the caller. This approach will allow the server to, for

example, fork off a process that will subsequently handle the actual

communication through the new connection. The server, in the meantime, can go

back and wait for another connection request on the original socket.

Let us now take a look at

the client side. Here, too, a socket must first be created using the socket

primitive, but explicitly binding the socket to a local address is not

necessary, since the operating system can dynamically allocate a port when the

connection is set up. The connect primitive requires that the caller specifies

the transport-level address to which a connection request is to be sent. The

client is blocked until a connection has been set up successfully, after which

both sides can start exchanging information through the send and receive primitives.

Finally, closing a connection is symmetric when using sockets, and is

established by having both the client and server call the close primitive. The

general pattern followed by a client and server for connection-oriented

communication using sockets is shown in Fig. 4-15. Details about network

programming using sockets and other interfaces in a UNIX environment can be

found in Stevens (1998).

Figure 4-15.

Connection-oriented communication pattern using sockets.

(This item is displayed on

page 143 in the print version)

The Message-Passing

Interface (MPI)

With the advent of

high-performance multicomputers, developers have been looking for

message-oriented primitives that would allow them to easily write highly

efficient applications. This means that the primitives should be at a

convenient level of abstraction (to ease application development), and that

their implementation incurs only minimal overhead. Sockets were deemed

insufficient for two reasons. First, they were at the wrong level of abstraction

by supporting only simple send and receive primitives. Second, sockets had been

designed to communicate across networks using general-purpose protocol stacks

such as TCP/IP. They were not considered suitable for the proprietary protocols

developed for high-speed interconnection networks, such as those used in

high-performance server clusters. Those protocols required an interface that

could handle more advanced features, such as different forms of buffering and

synchronization.

[Page 143]

The result was that most

interconnection networks and high-performance multicomputers were shipped with

proprietary communication libraries. These libraries offered a wealth of

high-level and generally efficient communication primitives. Of course, all

libraries were mutually incompatible, so that application developers now had a

portability problem.

The need to be hardware and

platform independent eventually led to the definition of a standard for message

passing, simply called the Message-Passing Interface or MPI. MPI is designed

for parallel applications and as such is tailored to transient communication.

It makes direct use of the underlying network. Also, it assumes that serious

failures such as process crashes or network partitions are fatal and do not require

automatic recovery.

MPI assumes communication

takes place within a known group of processes. Each group is assigned an

identifier. Each process within a group is also assigned a (local) identifier.

A (groupID, processID) pair therefore uniquely identifies the source or

destination of a message, and is used instead of a transport-level address.

There may be several, possibly overlapping groups of processes involved in a

computation and that are all executing at the same time.

At the core of MPI are messaging

primitives to support transient communication, of which the most intuitive ones

are summarized in Fig. 4-16.

Figure 4-16. Some of the

most intuitive message-passing primitives of MPI.

(This item is displayed on

page 144 in the print version)

|

Primitive |

Meaning |

|

MPI_bsend |

Append outgoing message to

a local send buffer |

|

MPI_send |

Send a message and wait

until copied to local or remote buffer |

|

MPI_ssend |

Send a message and wait

until receipt starts |

|

MPI_sendrecv |

Send a message and wait

for reply |

|

MPI_isend |

Pass reference to outgoing

message, and continue |

|

MPI_issend |

Pass reference to outgoing

message, and wait until receipt starts |

|

MPI_recv |

Receive a message; block

if there is none |

|

MPI_irecv |

Check if there is an

incoming message, but do not block |

Transient asynchronous

communication is supported by means of the MPI_bsend primitive. The sender

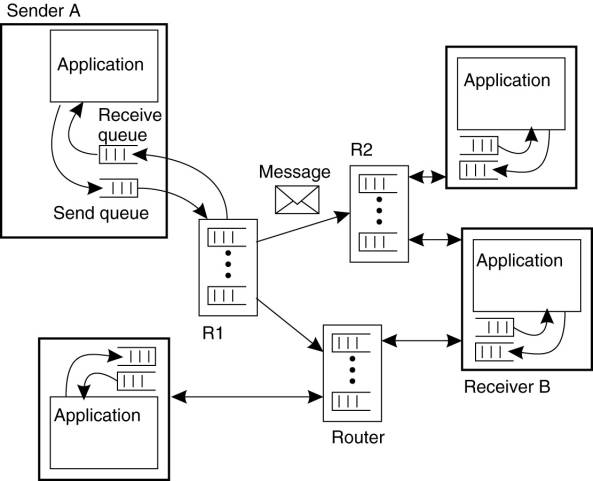

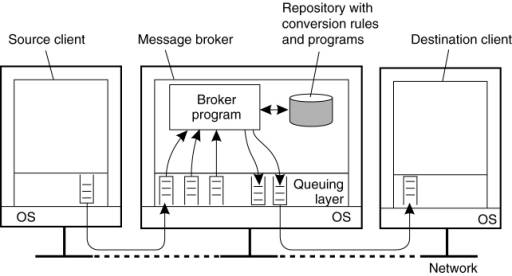

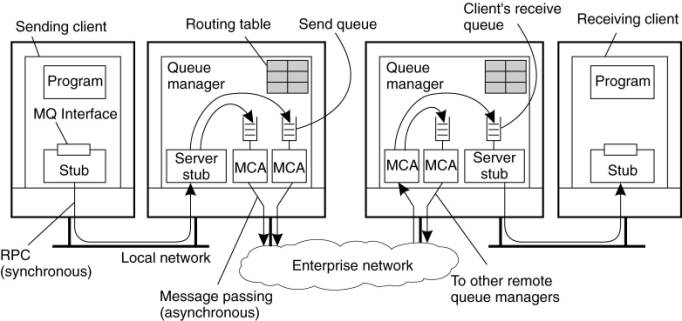

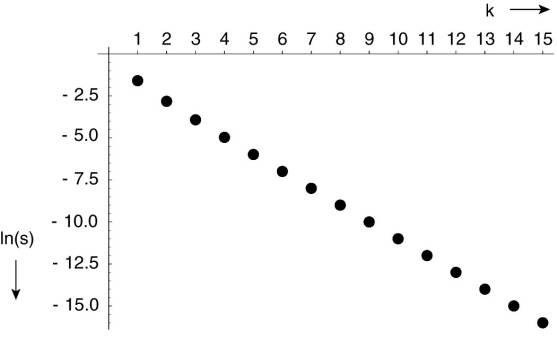

submits a message for transmission, which is generally first copied to a local buffer