SE 616 – Introduction to Software Engineering

Lecture 6

Chapter 12: User Interface Design

Golden Rules

- Place the User in Control
  - Define interaction in such a way that the user is not forced into performing unnecessary or undesired actions
  - Provide for flexible interaction (users have varying preferences)
  - Allow user interaction to be interruptible and reversible
  - Streamline interaction as skill level increases, and allow customization of interaction
  - Hide technical internals from the casual user
  - Design for direct interaction with objects that appear on the screen
- Reduce the User's Cognitive (Memory) Load
  - Reduce demands on the user's short-term memory
  - Establish meaningful defaults
  - Define intuitive shortcuts
  - Base the visual layout of the user interface on a familiar real-world metaphor
  - Disclose information in a progressive fashion
- Make the Interface Consistent
  - Allow the user to put the current task into a meaningful context
  - Maintain consistency across a family of applications
  - If past interaction models have created user expectations, do not make changes unless there is a good reason to do so
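One common way to make interaction reversible (one of the rules for placing the user in control) is an undo/redo stack of command objects. The Python sketch below assumes a hypothetical text editor; `InsertText` and `Editor` are invented names for illustration, not part of any UI toolkit.

```python
from dataclasses import dataclass, field


@dataclass
class InsertText:
    """Hypothetical reversible command: insert `text` at `position`."""
    position: int
    text: str

    def apply(self, doc: str) -> str:
        return doc[: self.position] + self.text + doc[self.position :]

    def revert(self, doc: str) -> str:
        return doc[: self.position] + doc[self.position + len(self.text) :]


@dataclass
class Editor:
    """Hypothetical editor that keeps undo and redo stacks of commands."""
    doc: str = ""
    undo_stack: list = field(default_factory=list)
    redo_stack: list = field(default_factory=list)

    def execute(self, command) -> None:
        self.doc = command.apply(self.doc)
        self.undo_stack.append(command)
        self.redo_stack.clear()  # a new action invalidates the redo history

    def undo(self) -> bool:
        if not self.undo_stack:
            return False  # nothing to reverse; the UI can disable "Undo"
        command = self.undo_stack.pop()
        self.doc = command.revert(self.doc)
        self.redo_stack.append(command)
        return True

    def redo(self) -> bool:
        if not self.redo_stack:
            return False
        command = self.redo_stack.pop()
        self.doc = command.apply(self.doc)
        self.undo_stack.append(command)
        return True


editor = Editor()
editor.execute(InsertText(0, "Hello"))
editor.execute(InsertText(5, " world"))
editor.undo()
print(editor.doc)  # Hello
editor.redo()
print(editor.doc)  # Hello world
```

Because `undo` and `redo` report whether anything happened, the interface can grey out the corresponding menu items, which also supports visibility of what actions are possible.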

User Interface Design Models

- Design model (incorporates data, architectural, interface, and procedural representations of the software)
- User model (end-user profiles: novice, knowledgeable intermittent user, knowledgeable frequent user)
- User's model or system perception (the user's mental image of the system)
- System image (look and feel of the interface and supporting media)

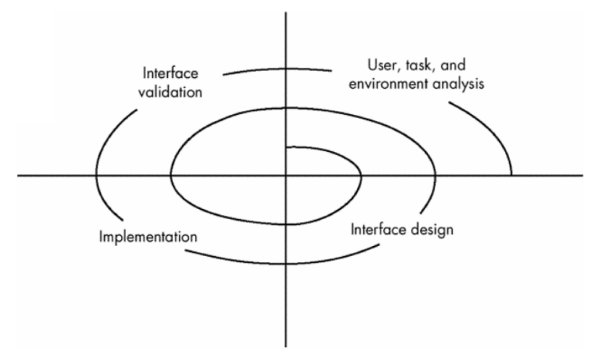

User Interface Design Process (Spiral Model)

1. User, task, and environment analysis and modeling
   - Where will the interface be located physically?
   - Will the user be sitting, standing, or performing other tasks unrelated to the interface?
   - Does the interface hardware accommodate space, light, or noise constraints?
   - Are there special human factors considerations driven by environmental factors?
2. Interface design
   - Define a set of interface objects and actions (and their screen representations) that enable a user to perform all defined tasks in a manner that meets every usability goal defined for the system
3. Interface construction
4. Interface validation
   - The ability of the interface to implement every user task correctly, to accommodate all task variations, and to achieve all general user requirements
   - The degree to which the interface is easy to use and easy to learn
   - The users' acceptance of the interface as a useful tool in their work

Task Analysis and Modeling

- Study the tasks users must complete to accomplish their goals without the computer, and map these into a similar set of tasks to be implemented in the user interface
- Study the existing specification for a computer-based solution and derive a set of tasks that will accommodate the user model, design model, and system perception

Interface Design Activities

1. Establish the goals and intentions of each task
2. Map each goal/intention to a sequence of specific actions (objects and methods for manipulating objects)
3. Specify the action sequence of tasks and subtasks (user scenario)
4. Indicate the state of the system at the time the user scenario is performed
5. Define control mechanisms
6. Show how control mechanisms affect the state of the system
7. Indicate how the user interprets the state of the system from information provided through the interface
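Activities 4 to 7 (system state, control mechanisms, their effect on state, and how the user reads the state) can be made concrete as a small state-transition table. The media-player states and controls below are hypothetical examples chosen for illustration; they do not come from the lecture.

```python
# Hypothetical media player: the states and controls are illustrative only.
TRANSITIONS = {
    # (current state, control mechanism) -> next state
    ("stopped", "play"): "playing",
    ("playing", "pause"): "paused",
    ("playing", "stop"): "stopped",
    ("paused", "play"): "playing",
    ("paused", "stop"): "stopped",
}

# How the interface presents each state to the user (activity 7).
STATUS_TEXT = {
    "stopped": "Stopped - press Play to start",
    "playing": "Playing",
    "paused": "Paused - press Play to resume",
}


def available_controls(state: str) -> list[str]:
    """Controls that are valid in `state`; the UI should enable only these."""
    return sorted(control for (s, control) in TRANSITIONS if s == state)


def press(state: str, control: str) -> str:
    """Apply a control (activity 6); invalid controls leave the state unchanged."""
    return TRANSITIONS.get((state, control), state)


state = "stopped"
for control in ["play", "pause", "play", "stop"]:
    state = press(state, control)
    print(f"{control:>5} -> {STATUS_TEXT[state]} | enabled: {available_controls(state)}")
```

Keeping the transitions in one table makes it easy to check that every state has a status message and at least one way out.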

Interface Design Issues

- System response time (the time between the point at which the user initiates some control action and the time when the system responds)
- User help facilities (integrated, context-sensitive help versus add-on help)
- Error information handling (messages should be non-judgmental, describe the problem precisely, and suggest valid solutions)
- Command labeling (based on user vocabulary, simple grammar, and consistent rules for abbreviation)
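The error-handling guideline (non-judgmental, precise, with a valid solution) can be enforced by building every message from the same three parts. A minimal sketch; the `ErrorMessage` type, the date rule, and the wording are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ErrorMessage:
    problem: str  # what went wrong, stated precisely
    cause: str    # why, in the user's vocabulary
    remedy: str   # a valid action the user can take next

    def render(self) -> str:
        # Neutral phrasing: describe the situation, not the user's mistake.
        return f"{self.problem}. {self.cause}. {self.remedy}."


def date_format_error(value: str) -> ErrorMessage:
    # Hypothetical rule: the application expects ISO dates (YYYY-MM-DD).
    return ErrorMessage(
        problem=f'The date "{value}" could not be read',
        cause="Dates must be written as YYYY-MM-DD",
        remedy="Enter the date again, for example 2024-03-15",
    )


print(date_format_error("15/03/24").render())
```

Compare this with a judgmental message such as "ILLEGAL DATE!", which names neither the expected format nor a fix.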

User Interface Implementation Tools

- User-interface toolkits or user-interface development systems (UIDS)
  - Provide components or objects that facilitate creation of windows, menus, device interaction, error messages, commands, and many other elements of an interactive environment
  - E.g.:
    - managing input devices (such as a mouse or keyboard)
    - validating user input
    - handling errors and displaying error messages
    - providing feedback (e.g., automatic input echo)
    - providing help and prompts
    - handling windows and fields, scrolling within windows
    - establishing connections between application software and the interface
    - insulating the application from interface management functions
    - allowing the user to customize the interface

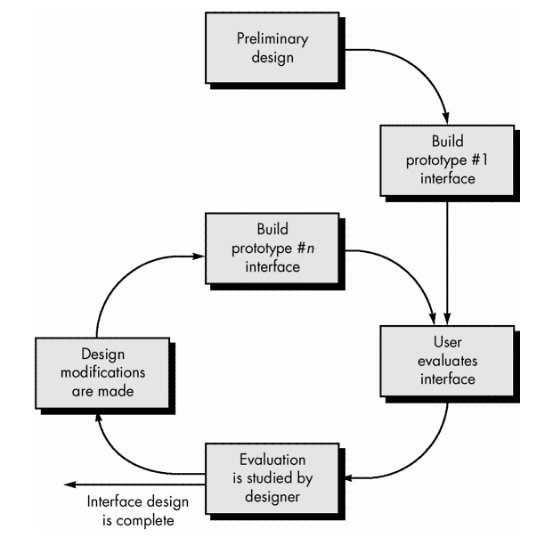

User Interface Evaluation Cycle

1. Preliminary design

2. Build first interface prototype

3. User evaluates interface

4. Evaluation studied by designer

5. Design modifications made

6. Build next prototype

7. If the interface is not complete, go to step 3

User Interface Design Evaluation Criteria

- The length and complexity of the written interface specification provide an indication of the amount of learning required by system users
- The number of user tasks and the average number of actions per task provide an indication of interaction time and overall system efficiency
- The number of tasks, actions, and system states in the design model provide an indication of the memory load required of system users
- Interface style, help facilities, and error handling protocols provide a general indication of system complexity and the degree of acceptance by the users
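The second and third criteria are simple counts over the design model. A sketch, assuming the task model is stored as a mapping from each (hypothetical) user task to its action sequence, with system states listed separately; summing tasks, distinct actions, and states into one "memory load" number is an illustrative proxy, not a standard formula.

```python
# Hypothetical design model: each user task maps to its sequence of actions.
task_model = {
    "open file": ["click File", "click Open", "select file", "click OK"],
    "save file": ["press Ctrl+S"],
    "print file": ["click File", "click Print", "click OK"],
}
system_states = {"idle", "dialog open", "printing"}

# Efficiency indicator: number of tasks and average actions per task.
num_tasks = len(task_model)
total_actions = sum(len(actions) for actions in task_model.values())
avg_actions_per_task = total_actions / num_tasks

# Memory-load indicator (assumed proxy): distinct things the user must know.
distinct_actions = {a for actions in task_model.values() for a in actions}
memory_load_items = num_tasks + len(distinct_actions) + len(system_states)

print(f"tasks={num_tasks}, avg actions/task={avg_actions_per_task:.2f}")
print(f"memory-load items={memory_load_items}")
```

For this toy model the script reports 3 tasks, 2.67 actions per task, and 12 memory-load items; comparing such numbers between two candidate designs is more meaningful than any single value.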

Understanding Interfaces

Don Norman's The Design of Everyday Things

Analyzes common objects (door, toaster, VCR, telephone)

Concepts of Good/Bad Design

- Affordances: perceived properties of an artifact that determine how it can be used (e.g., knobs, buttons, slots)
- Constraints: physical, semantic, cultural, and logical factors that encourage proper actions
- Conceptual models: a mental model of the system, which allows users to:
  - understand the system
  - predict the effects of actions
  - interpret results
- Mappings: describe the relationship between controls and their effects on the system
- Visibility: the system shows you the conceptual model by showing its state and the actions that can be taken
- Feedback: information about the effects of the user's actions

Norman's Seven Stages of Action, which explain how people do things:

1. Form a goal
2. Form the intention
3. Specify an action
4. Execute the action
5. Perceive the state of the world
6. Interpret the state of the world
7. Evaluate the outcome

Norman's Prescription for User-Centered Design:

- Make it easy to know what actions are possible at any time
- Make things visible:
  - the conceptual model
  - alternative actions
  - results of actions
- Make it easy to evaluate the current state of the system
- Follow natural mappings:
  - between actions and effects
  - between visible information and interpretation of system state

Norman's Questions about Artifacts:

1. What is the system's function?
2. What system actions are possible?
3. What are the mappings from intention to execution?
4. Does the device inform the user about what state it is in?
5. Can the user tell what state the system is in?

Design and Evaluation of Software

Past:

- Design
- Build
- Test

Present:

- Interactive Design?
  - Evaluate:
    - Existing system
    - Current work practices and products
  - Output:
    - Rapid prototypes and mockups
    - Alpha & beta versions

What skills are required to design for users?

- Engineering
- Programming
- Cognitive Psychology
- Human Factors
- Anthropology
- Graphic Design
- More?

Discipline of Human-Computer Interaction Design

Questions asked:

- How can knowledge of technology and users' needs be synthesized into an appropriate design?
- What process should be used?
- How can it be done effectively?

Answers are found in:

- Principles: collections of statements that advise the designer how to proceed
- Guidelines: collections of tests that can be applied to an interface to determine whether it is OK
- Methodologies: formalized procedures that are believed to guide and structure the process of effective design

Design Principles

Hansen (1971) - Four Principles:

- Know the user
- Optimize operations
- Minimize memorization
- Engineer for errors

Rubinstein & Hersh (1984) - 93 principles

Heckel (1991) - 30 design elements

Shneiderman (1992) - Eight golden rules:

- Strive for consistency
- Enable frequent users to use shortcuts
- Offer informative feedback
- Design dialogs to yield closure
- Offer simple error handling
- Permit easy reversal of actions
- Support internal locus of control
- Reduce short-term memory load

Gould and Lewis (1985) - Principles:

- Early focus on users
  - Designers should have direct contact with intended or actual users
- Early and continual user testing
  - The only feasible approach to successful design is an empirical one: observation, measurement, and enhancement
- Iterative design
  - Design, implementation, testing, feedback, evaluation, and change are repeated iteratively

Design Methodologies

Gould's design process

Informal methodology - 4 phases:

- Gearing-up phase
  - learn about related systems for good starting points
  - learn about user interaction standards, guidelines, and development procedures
- Initial design phase
  - preliminary specification of the user interface
  - collect information about users and their work
  - develop testable behavioral goals
  - organize the work to be done
- Iterative development phase
  - continuous evaluation and modification based on user feedback
- System installation phase
  - techniques for installing, introducing, marketing, etc.

NOTE:

- This is not a "cookbook" approach.
- Others have design processes; all are variations on a theme.

Nielsen (1993) develops a "usability engineering lifecycle model" - 11 stages:

- Know the user
  - individual user characteristics
  - the user's current and desired tasks
  - functional analysis
  - evolution of the user and the job
- Competitive analysis
- Setting usability goals and financial impact analysis
- Parallel design
- Participatory design
- Coordinated design of the total interface
- Apply guidelines and heuristic analysis
- Prototyping
- Empirical testing
- Iterative design to capture design rationale
- Collect feedback from field use

Nielsen's comments:

- Competitive analysis can be a cost-effective way of studying and evaluating approaches to product functionality and interface design
- Set quantitative usability goals (e.g., number of user errors per hour, level of satisfaction expressed by users)
- Parallel design is useful in brainstorming
- Participatory design: users become designers
- Consistency of the UI is achieved through corporate standards and GUI guidelines

Lewis & Rieman (1993) - shareware/internet book - Task-centered design process:

- figure out who's going to use the system to do what
- choose representative tasks for task-centered design
- plagiarize
- rough out a design
- think about it
- create a mockup or prototype
- test it with users
- iterate
- build it

- track it

- change it

Their comments:

- Suggest that designers work within existing interface frameworks
- Incorporate existing applications within their solutions
- Copy interaction techniques from other systems

Getting to know users and their tasks

Lewis & Rieman:

- Close personal contact
- Profile users:
  - age
  - education
  - computer literacy and task experience
  - reading and typing ability
  - attitude, motivation
- Choose representative tasks that provide functional coverage
- Profile jobs and tasks:
  - structure and importance
  - level of training and support provided
  - nature of system use (e.g., regular, mandatory)
- Produce scenarios
- Profile behavior:
  - analysis of the social setting (usually requires questionnaires and interviews)

Note: HCI did not come first; human factors came first, with task analysis: a study of what an operator is required to do, in terms of actions and/or cognitive processes, to achieve a goal.

Idea Generation

- observation

- listening

- brainstorming

- metaphor

- sketching

- scenario creation

- free association

- mediation

- juxtaposition

- searching for patterns

- "lateral thinking"

Envisionment and Prototyping

Envisionment of interfaces: the formulation and exploration of system and interface ideas and metaphors at a very early stage of the design process.

Methods of envisionment: stories, scenarios, storyboards, flipbooks, animated drawings, cutout animation, animation of real objects, computer animation, computer-scripted interactive prototypes.

The Role of Metaphor

Def: a figure of speech in which a word or phrase denoting one kind of object or action is used in place of another to suggest a likeness or analogy between them.

Metaphors help users understand a target domain they don't understand in terms of a source domain they already understand (e.g., typewriter (source) → word processor (target)).

Evaluating Systems and Their User Interfaces

Joseph McGrath (1994) - framework for understanding methods of evaluation:

"the phenomenon of interest involves states and actions of human systems - of individuals, groups, organizations, and larger social entities - and by-products of those associations"

Terminology:

- "generalizability" of evidence
  - over the population of "actors"
- "precision" of measurement
  - of the "behaviors" studied
- "realism" of the situation
  - or "context" where evidence is gathered
- correlation vs. causation
- randomization, true experiments, and statistical inference

Taxonomy of research strategies

- Field strategies: study systems in use on real tasks, in real work settings

  - Field studies: observing without intervening

  - Field experiments: observe the impact of changing some aspect of the system's environment (e.g., beta testing products)
- Experimental strategies: carried out in a laboratory
  - Experimental simulations: create a realistic system in the lab for experimental purposes, used by real users
  - Laboratory experiments: controlled experiments used to study the impact of a particular parameter
- Respondent strategies
  - Judgment studies: responses from a small set of judges, designed to give information about a stimulus (e.g., Delphi methods)
  - Sample surveys: responses from a large set of respondents about the respondents themselves (e.g., questionnaires)
- Theoretical strategies
  - Formal theory: gives qualitative insights (e.g., theory of vision)
  - Computer simulation: run on a computer to derive predictions about performance

Five approaches to system & interface evaluation

- Heuristic evaluation with usability guidelines
  - Guidelines must be made testable.
  - It is sometimes difficult to apply guidelines to real design problems.
  - It is difficult to deal with a large set of guidelines.
  - Nielsen (1994): 10 design heuristics (based on a factor analysis of 249 usability problems from 11 projects):
    - Visibility of system status
    - Match between system and the real world (e.g., speak the user's language)
    - User control and freedom (e.g., easy exit, undo)
    - Consistency and standards (e.g., the same words mean the same thing in different contexts)
    - Error prevention
    - Recognition rather than recall (e.g., options and actions should be visible)
    - Flexibility and efficiency of use (e.g., accelerators, shortcuts, customization)
    - Aesthetic and minimalist design
    - Help users recognize, diagnose, and recover from errors (e.g., error messages in plain language)
    - Help and documentation

- Cognitive walkthroughs
  - Inspired by structured code walkthroughs
  - Here, a set of representative tasks is selected and stepped through keystroke by keystroke, menu selection by menu selection.
  - Developers fill out forms that require them to specify information about the user's goals, tasks, subtasks, knowledge, the visible state of the interface, and relations and changes analysis.
  - Questions to ask:
    - Will users try to achieve the right effect?
    - Will users notice that the correct action is available?
    - Will users associate the correct action with the effect they are trying to achieve?
    - If the correct action is performed, will the user see that progress is being made toward solution of the task?
  - The theory underlying cognitive walkthroughs is based on the psychology of inexperienced users.
  - The approach is intended to evaluate a design for ease of learning.
  - Cognitive walkthroughs suggest specific psychological explanations for problems, thereby pointing developers toward solutions.

- Usability testing
  - Cognitive walkthroughs rely on the intuition of interface specialists
  - They need to be augmented with experience
  - Participants are recorded
  - "Errors" are identified
  - Interface changes are made
  - Users are videotaped trying to use the system
  - "Thinking aloud" technique

- Usability engineering
  - User testing that is more formal, in the sense that interface specialists set explicit quantitative performance goals known as metrics
  - E.g., new users must be able to create and save forms in the first 10 minutes.
  - Arguments for explicit quantitative goals:
    - Human factors engineers are taken more seriously in an engineering setting because they adopt quantitative, objective goals familiar to engineers
    - Progress can be charted and success recognized

- Controlled experiments
  - Advantages:
    - studying individual behavior without the influence of groups
    - focus on studying low-level perceptual, cognitive, or motor activities
  - Types of designs:
    - Within-subjects: each subject experiences the different options being tested
    - Between-subjects: each subject tries just one of the alternatives
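The two designs differ in how participants are assigned to conditions. A sketch, assuming hypothetical interface variants A, B, and C: the within-subjects helper gives every participant all conditions and rotates the order across participants (a simple Latin-square-style counterbalancing, one common technique rather than one the lecture prescribes) to spread order and learning effects; the between-subjects helper randomly splits participants into equal-sized groups.

```python
import random


def within_subjects(participants, conditions):
    """Every participant sees every condition; the order rotates per participant."""
    n = len(conditions)
    return {
        p: [conditions[(i + k) % n] for k in range(n)]
        for i, p in enumerate(participants)
    }


def between_subjects(participants, conditions, seed=0):
    """Each participant sees exactly one condition; group sizes stay balanced."""
    shuffled = list(participants)
    random.Random(seed).shuffle(shuffled)  # random assignment to groups
    return {p: conditions[i % len(conditions)] for i, p in enumerate(shuffled)}


people = [f"P{i}" for i in range(1, 7)]
variants = ["A", "B", "C"]
print(within_subjects(people, variants))
print(between_subjects(people, variants))
```

Within-subjects designs need fewer participants but risk carry-over between conditions; between-subjects designs avoid carry-over at the cost of larger groups.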

Evaluating Evaluation:

So far, the best methods are:

- Heuristic evaluation combined with empirical usability testing
- Cognitive walkthroughs can be helpful

Possible Uses of Evaluation Methods in a Development Process:

- Information collection: e.g., interviews and questionnaires
- Concept design: e.g., interviews, usability testing, controlled testing
- Functionality and interface design: usability testing, human information processing simulations
- Prototype implementation: usability testing, heuristic evaluation
- Deliverable system implementation: usability testing
- System enhancement and evolution: interaction analysis, interviews and questionnaires, field studies

Theory-based design

- Most methods are ad hoc; a solid theory is needed
- Theories exist, but are they fundamental?

Preserving Design Rationale

Recording the design history and its rationale could serve several purposes:

- Lead to better design by looking at the process of considering alternatives
- Useful when modifications are considered

Usability Design Process (Gould)

General observations about system design:

- Nobody gets it right the first time.
- Development is full of surprises.
- Developing user-oriented systems requires living in a sea of change.
- Developers need good tools.
- Even with the best-designed system, users will make mistakes using it.

Steps in Designing a Good System

- Define the problem the customer wants solved.
- Identify the tasks the user wants performed.
- Learn user capabilities.
- Learn hardware/software constraints.
- Set specific usability targets.
- Sketch out user scenarios.
- Design and build a prototype.
- Test the prototype.
- Iteratively identify, incorporate, and test changes until:
  - behavioral targets are met, or
  - critical deadlines are reached.
- Install the system.
- Measure customer reaction and acceptance.
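The iterative step has two exit conditions: the behavioral target is met, or a critical deadline is reached. A minimal sketch, assuming hypothetical `run_usability_test` and `revise` callbacks supplied by the team, scores expressed as integer percentages, and a deadline modeled as a maximum number of iterations.

```python
from typing import Callable


def iterate_design(
    prototype: dict,
    run_usability_test: Callable[[dict], int],
    revise: Callable[[dict, int], dict],
    target: int,
    max_iterations: int,
) -> tuple[dict, int, bool]:
    """Test and revise until the behavioral target is met or the deadline hits.

    Scores are percentages (e.g. % of users completing a task unaided);
    `max_iterations` is an assumed stand-in for the critical deadline.
    """
    score = run_usability_test(prototype)
    iterations = 0
    while score < target and iterations < max_iterations:
        prototype = revise(prototype, score)   # incorporate changes
        score = run_usability_test(prototype)  # test the changes
        iterations += 1
    return prototype, score, score >= target


# Toy stand-ins: each revision fixes one problem and adds 10 points.
def fake_test(prototype: dict) -> int:
    return 55 + 10 * prototype["fixes"]


def fake_revise(prototype: dict, score: int) -> dict:
    return {"fixes": prototype["fixes"] + 1}


print(iterate_design({"fixes": 0}, fake_test, fake_revise, target=85, max_iterations=5))
# ({'fixes': 3}, 85, True)
print(iterate_design({"fixes": 0}, fake_test, fake_revise, target=85, max_iterations=2))
# ({'fixes': 2}, 75, False)
```

Returning whether the target was met makes the second outcome explicit: the deadline arrived first, so the team ships knowing the target was missed.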

Principles of Usability Design

Principle 1: Early and Continual Focus on Users

- Decide who the users will be and what they will be doing with the system
- Methods to carry this out:
  - Talk with users
  - Visit customer locations
  - Find out problems, difficulties, worries
  - Show them what you had in mind for the design
  - Assume the intended users are the experts
  - Observe users working
  - Videotape users working
  - Learn about the work organization (you might have to match the system design with the corporate design)
  - Have potential users think out loud during actual work (in real time)
  - Try the worker's job yourself
  - Make users part of the design team
  - Perform task analysis (step by step)
  - Use surveys and questionnaires
  - Set testable behavioral target goals

Example:

Twenty experimental participants familiar with the IBM PC but unfamiliar with query languages will receive 60 minutes of training using the new online query training system for novice users. They will then perform nine experimental tasks.

On task 1, 85% of tested users must complete it successfully in less than 15 minutes, with no help from the experimenter. They may use all reference and help materials, but no quick help. Task 1 consists of 6 steps:

- Create a query on the displayed query panel using the table SCHOOL.COURSES.
- Delete all column names except COURSE and TITLE.
- Save the current query with the name CTITLE.
- Run the query once.
- Get the current query panel displayed.
- Clear the current query panel so it contains nothing.
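Checking the task 1 target amounts to counting participants who finished in under 15 minutes without experimenter help and comparing that fraction with 85%. A sketch; `TaskResult` is a hypothetical record type and the results below are invented for illustration, not data from the study.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    participant: str
    completed: bool
    minutes: float
    needed_experimenter_help: bool


def meets_target(results, max_minutes=15.0, required_percent=85):
    """Return (target met?, number of successes) for one task."""
    successes = sum(
        r.completed and r.minutes < max_minutes and not r.needed_experimenter_help
        for r in results
    )
    # Integer comparison avoids floating-point rounding at the boundary.
    return successes * 100 >= required_percent * len(results), successes


# Illustrative records only (not real study data): 20 participants.
results = [TaskResult(f"P{i}", True, 10.0, False) for i in range(17)]
results += [
    TaskResult("P17", True, 16.5, False),   # too slow
    TaskResult("P18", False, 15.0, False),  # did not finish
    TaskResult("P19", True, 12.0, True),    # needed experimenter help
]
print(meets_target(results))  # (True, 17)
```

With 17 of 20 successful, the result sits exactly on the 85% threshold, which is why the comparison is done in integers.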

Checklist for Achieving Early and Continual Focus on Users

- [ ] We defined a major group of potential users.
- [ ] We talked with the users about the good and bad points of their present job and system.
- [ ] Our preliminary system design discussions always kept in mind the characteristics of these users.
- [ ] We watched these users performing their present jobs.
- [ ] We asked them to think aloud as they worked.
- [ ] We tried their jobs.
- [ ] We did a formal task analysis.
- [ ] We developed testable behavioral target goals for our proposed system.

Principle 2: Early and Continual User Testing

- The first design is not perfect.
- Measurements must be made.
- Methods to carry this out:
  - Printed or video scenarios
    - Exact details:
      - exact layout and wording on the screen
      - what keys to press, and the system's response
  - Early user manuals
  - Physical mock-ups
  - Simulations: paper and pencil, or computer
  - Early prototyping: get people to work with the system
  - Early demos
  - Think aloud
  - Make videos of workers using the system
  - Hallway-and-storefront methods: put the system in situ
  - Online forums
  - Formal prototype tests with formal behavioral methods
  - "Try to destroy it" contests
  - Field studies
  - Follow-up studies

Checklist for Achieving Early User Testing

- [ ] We made informal, preliminary sketches of a few user scenarios (specifying exactly what the user and system messages will be) and showed them to a few prospective users.
- [ ] We have begun writing the user manual, and it is guiding the development process.
- [ ] We have used simulations to try out the functions and organization of the user interface.
- [ ] We have done early demonstrations.
- [ ] We invited as many people as possible to comment on the ongoing instantiations of all usability components.
- [ ] We had prospective users think aloud as they used simulations, mock-ups, and prototypes.
- [ ] We used hallway-and-storefront methods.
- [ ] We used computer conferencing forums to get feedback on usability.
- [ ] We did formal prototype user testing.
- [ ] We compared our results to established behavioral target goals.
- [ ] We met our behavioral benchmark targets.
- [ ] We let motivated people try to find bugs in our system.
- [ ] We did field studies.
- [ ] We did follow-up studies on people who are now using the system we made.

Principle 3: Iterative Design

- Identify required changes
- Make changes
- Methods to carry this out: software tools (e.g., UIMS)
  - Changes in the UI need to be possible and easy
  - Brings the UI within the control of human factors people, not programmers
  - Makes work more productive
  - Tools have inherent conceptual, semantic, and procedural ways of doing things
  - Tools facilitate consistency

Checklist for Carrying out Iterative Design

- [ ] All aspects of usability could be easily changed, i.e., we had good tools.
- [ ] We regularly changed our system, manuals, etc., based upon testing results with prospective users.

Principle 4: Integrated Design

- All design work evolves in parallel

Checklist for Achieving Integrated Design

- [ ] We considered all aspects of usability in our initial design.
- [ ] One person had responsibility for all aspects of usability:
  - [ ] User manual
  - [ ] Manuals for subsidiary groups (e.g., operators, trainers)
  - [ ] Identification of required functions
  - [ ] User interfaces
  - [ ] Assurance of adequate system reliability and responsiveness
  - [ ] Outreach programs (e.g., help system, training materials, hotlines, videotapes)
  - [ ] Installation
  - [ ] Customization
  - [ ] Field maintenance
  - [ ] Support-group users