Inductive Concept Learning by Learning Decision Trees

- Goal: Build a decision tree for classifying examples as positive or negative instances of a concept

- Supervised learning, batch processing of training examples, using a preference bias

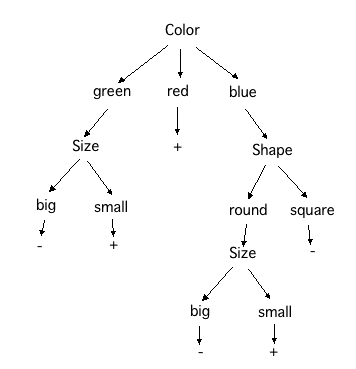

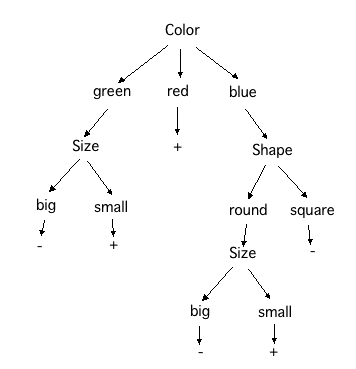

- A decision tree is a tree in which each non-leaf node is associated with an attribute (feature), each leaf node is associated with a classification (+ or -), and each arc is associated with one of the possible values of the attribute at the node from which the arc originates. The figures above illustrate an example.

- Preference Bias: Ockham's Razor. The simplest explanation that is consistent with all observations is the best. Here, that means the smallest decision tree that correctly classifies all of the training examples is best.

- Decision Tree Construction using a Greedy Algorithm

- The original algorithm, ID3, was developed by Quinlan (1986)

- Top-down construction of the decision tree by recursively selecting the "best attribute" to use at the current node in the tree. Once the attribute is selected for the current node, generate child nodes, one for each possible value of the selected attribute. Partition the examples using the possible values of this attribute, and assign each subset of the examples to the corresponding child node. Repeat for each child node until all examples associated with a node are either all positive or all negative; that node then becomes a leaf labeled with that class.
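A minimal Python sketch of the greedy top-down construction described above, under several stated assumptions. Examples are dicts mapping attribute names to values, with a parallel list of "+"/"-" labels. The "best attribute" is chosen by information gain, the criterion ID3 uses, although these notes have not yet defined it. When no attributes remain, or an attribute value has no examples, the node becomes a leaf labeled with the majority class; this is a common ID3 convention the notes do not cover. The names `Leaf`, `Node`, `id3`, `classify`, `count_nodes`, and the toy data are hypothetical.

```python
import math
from collections import Counter
from dataclasses import dataclass, field
from typing import Dict, List, Union


@dataclass
class Leaf:
    label: str  # classification: "+" or "-"


@dataclass
class Node:
    attribute: str  # attribute tested at this non-leaf node
    # One arc (child) per possible value of the attribute.
    children: Dict[str, "Tree"] = field(default_factory=dict)


Tree = Union[Leaf, Node]


def entropy(labels: List[str]) -> float:
    """Entropy, in bits, of a non-empty list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(examples, labels, attribute) -> float:
    """Reduction in entropy from partitioning the examples on `attribute`."""
    remainder = 0.0
    for value in {ex[attribute] for ex in examples}:
        subset = [lab for ex, lab in zip(examples, labels) if ex[attribute] == value]
        remainder += len(subset) / len(labels) * entropy(subset)
    return entropy(labels) - remainder


def majority(labels: List[str]) -> str:
    return Counter(labels).most_common(1)[0][0]


def id3(examples, labels, attributes, values) -> Tree:
    """Greedy top-down construction; `values` maps each attribute to its possible values."""
    if len(set(labels)) == 1:  # all positive or all negative: stop with a leaf
        return Leaf(labels[0])
    if not attributes:  # assumption: no attributes left, use the majority label
        return Leaf(majority(labels))
    # Greedy step: pick the "best attribute" (assumed here: highest information gain).
    best = max(attributes, key=lambda a: information_gain(examples, labels, a))
    node = Node(best)
    remaining = [a for a in attributes if a != best]
    for value in values[best]:
        # Partition: the child for `value` receives only the matching examples.
        idx = [i for i, ex in enumerate(examples) if ex[best] == value]
        if idx:
            node.children[value] = id3(
                [examples[i] for i in idx], [labels[i] for i in idx], remaining, values
            )
        else:  # assumption: a value with no examples gets the parent's majority label
            node.children[value] = Leaf(majority(labels))
    return node


def classify(tree: Tree, example: Dict[str, str]) -> str:
    # Follow the arc matching the example's value at each node until reaching a leaf.
    while isinstance(tree, Node):
        tree = tree.children[example[tree.attribute]]
    return tree.label


def count_nodes(tree: Tree) -> int:
    # Tree size, the quantity Ockham's razor prefers to keep small.
    if isinstance(tree, Leaf):
        return 1
    return 1 + sum(count_nodes(child) for child in tree.children.values())


# Toy concept (hypothetical data): positive exactly when color is red.
values = {"color": ["red", "blue"], "size": ["small", "large"]}
examples = [
    {"color": "red", "size": "small"},
    {"color": "red", "size": "large"},
    {"color": "blue", "size": "small"},
    {"color": "blue", "size": "large"},
]
labels = ["+", "+", "-", "-"]
tree = id3(examples, labels, ["color", "size"], values)
print(tree.attribute)                                     # color
print(classify(tree, {"color": "red", "size": "large"}))  # +
print(count_nodes(tree))                                  # 3
```

Because each attribute choice is made greedily and never revisited, this procedure tends to produce small trees but is not guaranteed to find the smallest tree consistent with the training examples; it is a heuristic for the Ockham's Razor preference rather than an exact search.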