Now let's examine the red child node, containing three examples: [1, 3, 5]. We have two remaining attributes, shape and size.
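The gains for this node can be checked mechanically. What follows is a minimal Python sketch, not the author's code. `info`, `remainder`, and `gain` are hypothetical helper names. I(p, q) is assumed to be the two-class entropy in bits. Each candidate split is described by (positive, negative) counts per branch, read off the fractions in the remainders for examples 1, 3 and 5.

```python
from math import log2


def info(*probs: float) -> float:
    """Entropy I(p1, ..., pn) in bits; zero probabilities are skipped (0 * log 0 = 0)."""
    return -sum(p * log2(p) for p in probs if p > 0)


def remainder(branches: list[tuple[int, int]]) -> float:
    """Weighted entropy of the child branches; each branch is a (positive, negative) count pair."""
    total = sum(p + n for p, n in branches)
    return sum((p + n) / total * info(p / (p + n), n / (p + n)) for p, n in branches)


def gain(branches: list[tuple[int, int]]) -> float:
    """Information gain: entropy of the parent node minus the remainder after the split."""
    pos = sum(p for p, _ in branches)
    neg = sum(n for _, n in branches)
    total = pos + neg
    return info(pos / total, neg / total) - remainder(branches)


# Red node: examples 1, 3, 5 (2 positive, 1 negative).
# Hypothetical branch counts taken from the fractions in the remainders.
shape_branches = [(1, 0), (1, 1)]  # I(1/1, 0/1) and I(1/2, 1/2)
size_branches = [(2, 0), (0, 1)]   # I(2/2, 0/2) and I(0/1, 1/1)

gains = {"shape": gain(shape_branches), "size": gain(size_branches)}
for attr, g in gains.items():
    print(f"Gain({attr}) = {g:.4f}")

best = max(gains, key=gains.get)
print("split on", best)
```

Skipping zero probabilities in `info` implements the usual convention 0 · log 0 = 0. That convention is why the pure branches of the size split contribute nothing to its remainder.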

Remainder(shape) = 1/3 I(1/1, 0/1) + 2/3 I(1/2, 1/2) = 1/3 * 0 + 2/3 * 1 = 0.667 Gain(shape) = I(2/3, 1/3) - .667 = .914 - .667 = 0.247 Remainder(size) = 2/3 I(2/2, 0/2) + 1/3 I(0/1, 1/1) = 2/3 * 0 + 1/3 * 0 = 0 Gain(size) = I(2/3, 1/3) - 0 = 0.914

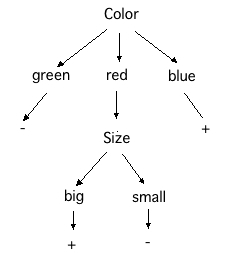

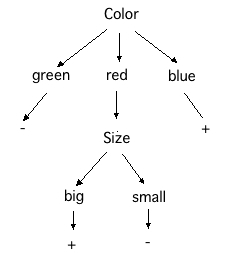

Max(.247, .914) = .914, so make size the attribute at this node. It's children are uniform in their classifications, so the final decision tree is: