Beyond Perceptrons: Multi-Layer Neural Networks

There are two kinds of multi-layer networks: feedforward and recurrent.

- Perceptrons are too weak a computing model because they can learn only linearly separable functions, so we need to define more complex neural networks to overcome this limitation.

- Multi-layer, feedforward networks generalize 1-layer networks

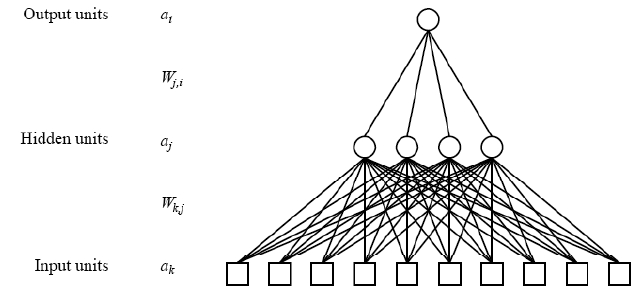

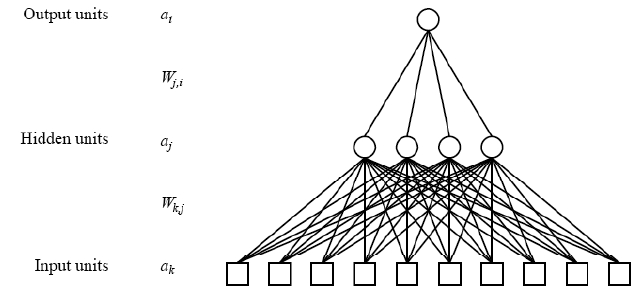

(i.e., Perceptrons) to n-layer networks as follows:

- Partition units into n+1 "layers," such that layer 0 contains the input units, layers 1, ..., n-1 are the hidden layers, and layer n contains the output units.

- Each unit in layer k, k=0, ..., n-1, is connected to all of the units in layer k+1.

- Connections run bottom-up only (from layer k to layer k+1), with no cycles, hence the name "feedforward" nets.

- The programmer defines the number of hidden layers and the number of units in each layer (input, hidden, and output).

- Example of a 2-layer feedforward network:

- 3-layer feedforward neural nets (i.e., nets with two hidden layers) with an LTU at each hidden and output layer unit can compute arbitrary functions (hence they are universal computing devices), although the complexity of the function is limited by the number of units in the network.
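The points above can be illustrated with a small Python sketch. It is not taken from these notes: the names `ltu`, `forward`, `XOR_NET`, and `single_ltu_exists` are hypothetical, the weights are hand-picked rather than learned, and the convention that a unit fires when its weighted sum reaches the threshold is an assumption. The sketch builds a 2-layer feedforward net of LTUs (one hidden layer) that computes XOR, a function that is not linearly separable. It then searches a small grid of integer weights for a single LTU that does the same. The grid search only illustrates the limitation and is not a proof.

```python
from itertools import product


def ltu(weighted_sum, threshold):
    """Linear threshold unit: outputs 1 when the weighted sum reaches the threshold (assumed convention)."""
    return 1 if weighted_sum >= threshold else 0


def forward(layers, inputs):
    """Propagate inputs through fully connected layers of LTUs.

    `layers` holds one entry per non-input layer (layers 1..n); each entry is
    a list of (weights, threshold) pairs, one pair per unit in that layer.
    """
    activations = list(inputs)  # layer 0: the input units
    for layer in layers:        # bottom-up only, so there are no cycles
        activations = [
            ltu(sum(w * a for w, a in zip(weights, activations)), threshold)
            for weights, threshold in layer
        ]
    return activations


# Hand-picked (hypothetical) weights for a 2-layer net that computes XOR.
XOR_NET = [
    [([1, 1], 1), ([1, 1], 2)],  # hidden layer: h1 = OR(x1, x2), h2 = AND(x1, x2)
    [([1, -1], 1)],              # output layer: fires when h1 = 1 and h2 = 0
]


def single_ltu_exists(target, values=range(-3, 4)):
    """Search a small integer grid for one LTU that computes `target` on {0,1}^2."""
    for w1, w2, threshold in product(values, repeat=3):
        if all(
            ltu(w1 * x1 + w2 * x2, threshold) == target(x1, x2)
            for x1, x2 in product((0, 1), repeat=2)
        ):
            return True
    return False


for x in product((0, 1), repeat=2):
    print(x, forward(XOR_NET, x)[0])

print("single LTU for AND found:", single_ltu_exists(lambda a, b: a & b))
print("single LTU for XOR found:", single_ltu_exists(lambda a, b: a ^ b))
```

Here the programmer fixes the architecture (2 input units, 2 hidden units, 1 output unit) by choosing the shape of `XOR_NET`. Adding another inner list would add another layer without changing `forward`.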